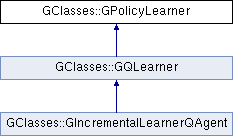

`GPolicyLearner` is the abstract base class for algorithms that learn a policy.

| Return type | Member | Description |
| --- | --- | --- |
| | `GPolicyLearner(const GRelation &relation, int actionDims)` | `actionDims` specifies how many dimensions are in the action vector. The first attributes of `relation` describe the senses, and the last `actionDims` attributes describe the actions. |
| | `GPolicyLearner(GDomNode *pNode)` | |
| `virtual` | `~GPolicyLearner()` | |
| `void` | `onTeleport()` | If an external force changes the agent's state (and therefore the senses it will next receive as `pSenses`), call this method to inform the agent that the change is not a consequence of its most recent action. The agent should then refrain from "learning" the next time `refinePolicyAndChooseNextAction` is called. |
| `virtual void` | `refinePolicyAndChooseNextAction(const double *pSenses, double *pOutActions) = 0` | Tells the agent to learn from the current senses and to select a new action vector (written to `pOutActions`). Implementations should also set `m_teleported` to `false`. |
| `void` | `setExplore(bool b)` | If `b` is `false`, the agent only exploits and no learning occurs. If `b` is `true` (the default), the agent seeks a balance between exploration and exploitation. |
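The contract between `onTeleport`, `refinePolicyAndChooseNextAction`, `setExplore`, and `m_teleported` can be illustrated with a minimal, self-contained sketch. `PolicyLearnerSketch`, `CountingLearner`, and `runDemo` are hypothetical names; the sketch does not use the real Waffles headers, and it assumes (as an illustration only) that `m_teleported` starts as `true` because there is no previous action to learn from. Instead of learning, the toy subclass counts how many times it would have refined its policy.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical, simplified stand-in for GPolicyLearner. It mirrors only the
// members documented above and is NOT the Waffles class.
class PolicyLearnerSketch {
public:
    virtual ~PolicyLearnerSketch() = default;

    // The next senses were not caused by the most recent action.
    void onTeleport() { m_teleported = true; }

    // false: exploit only, no learning. true (the default): explore and learn.
    void setExplore(bool b) { m_explore = b; }

    virtual void refinePolicyAndChooseNextAction(const double* pSenses,
                                                 double* pOutActions) = 0;

protected:
    bool m_teleported = true;  // assumption: no previous action to learn from yet
    bool m_explore = true;
};

// Toy subclass with one sense and one continuous action.
class CountingLearner : public PolicyLearnerSketch {
public:
    std::size_t updates = 0;

    void refinePolicyAndChooseNextAction(const double* pSenses,
                                         double* pOutActions) override {
        assert(pSenses != nullptr && pOutActions != nullptr);
        if (m_explore && !m_teleported)
            ++updates;                 // a real learner would refine its policy here
        m_teleported = false;          // as the documentation asks implementations to do
        pOutActions[0] = -pSenses[0];  // placeholder policy
    }
};

// Runs a short episode and returns how many learning updates happened.
std::size_t runDemo() {
    CountingLearner agent;
    double senses[1] = {0.5};
    double actions[1] = {0.0};
    agent.refinePolicyAndChooseNextAction(senses, actions);  // first step: nothing to learn from
    agent.refinePolicyAndChooseNextAction(senses, actions);  // update 1
    agent.onTeleport();                                      // an external force moved the agent
    agent.refinePolicyAndChooseNextAction(senses, actions);  // learning skipped
    agent.refinePolicyAndChooseNextAction(senses, actions);  // update 2
    agent.setExplore(false);                                 // exploit only from now on
    agent.refinePolicyAndChooseNextAction(senses, actions);  // learning skipped
    return agent.updates;
}
```

Calling `runDemo()` returns 2: learning is suppressed on the first step, on the step right after `onTeleport()`, and on every step after `setExplore(false)`. Resetting the flag inside `refinePolicyAndChooseNextAction` keeps a teleport from suppressing more than one update.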

`GClasses::GPolicyLearner::GPolicyLearner(const GRelation &relation, int actionDims)`

`actionDims` specifies how many dimensions are in the action vector. For example, if your agent has a discrete set of ten possible actions, `actionDims` should be 1, because a single value is enough to represent one of ten discrete actions. If your agent has four legs that can move independently to continuous locations relative to its position in 3D space, `actionDims` should be 12, because it takes three values to represent the force or offset vector for each leg. `relation` specifies the type (continuous or discrete) of each element in the sense and action vectors: the first attributes refer to the senses, and the last `actionDims` attributes refer to the actions. The number of sense dimensions is therefore the number of attributes in `relation` minus `actionDims`.
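The split between sense and action attributes can be sketched without the library. `SimpleRelation`, `splitDims`, `isDiscreteAction`, `tenActionAgent`, and `fourLeggedAgent` are hypothetical names; `SimpleRelation` is not `GRelation`, and its convention of storing 0 for a continuous attribute and the number of values for a discrete one is an assumption made for this illustration. The sense counts (5 and 8) are likewise made up; only the action layouts come from the two examples above.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for a relation: one entry per attribute, holding 0 for
// a continuous attribute or the number of values for a discrete one.
struct SimpleRelation {
    std::vector<std::size_t> valueCounts;
};

struct VectorLayout {
    std::size_t senseDims;
    std::size_t actionDims;
};

// Senses come first; the last actionDims attributes are the actions.
VectorLayout splitDims(const SimpleRelation& rel, std::size_t actionDims) {
    if (actionDims > rel.valueCounts.size())
        throw std::invalid_argument("actionDims exceeds the number of attributes");
    return {rel.valueCounts.size() - actionDims, actionDims};
}

// True if action element i (0-based) is discrete, i.e. has a nonzero value count.
bool isDiscreteAction(const SimpleRelation& rel, std::size_t actionDims, std::size_t i) {
    assert(actionDims <= rel.valueCounts.size() && i < actionDims);
    std::size_t firstAction = rel.valueCounts.size() - actionDims;
    return rel.valueCounts[firstAction + i] != 0;
}

// 5 continuous senses (hypothetical) plus one discrete action with ten values.
SimpleRelation tenActionAgent() {
    SimpleRelation r;
    r.valueCounts.assign(5, 0);   // senses
    r.valueCounts.push_back(10);  // a single attribute encodes one of ten actions
    return r;
}

// 8 continuous senses (hypothetical) plus 4 legs x 3 continuous values each.
SimpleRelation fourLeggedAgent() {
    SimpleRelation r;
    r.valueCounts.assign(8 + 4 * 3, 0);
    return r;
}
```

With these layouts, `splitDims(tenActionAgent(), 1)` yields 5 sense dimensions and `splitDims(fourLeggedAgent(), 12)` yields 8, matching "number of attributes minus `actionDims`".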