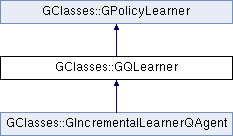

The base class of a Q-learner. To use this class, implement its four abstract methods: getQValue, setQValue, chooseAction, and rewardFromLastAction. See also the comment for GPolicyLearner.

| Return type | Member | Description |
| --- | --- | --- |
| | GQLearner (const GRelation &relation, int actionDims, double *pInitialState, GRand *pRand, GAgentActionIterator *pActionIterator) | |
| virtual | ~GQLearner () | |
| virtual double | getQValue (const double *pState, const double *pAction)=0 | You must implement some kind of structure to store Q-values. This method should return the current Q-value for the specified state and action. |
| virtual void | refinePolicyAndChooseNextAction (const double *pSenses, double *pOutActions) | See GPolicyLearner::refinePolicyAndChooseNextAction. |
| void | setActionCap (int n) | Specifies a cap on how many actions to sample. (If actions are continuous, you obviously don't want to try them all.) |
| void | setDiscountFactor (double d) | Sets the factor for discounting future rewards (often called "gamma"). |
| void | setLearningRate (double d) | Sets the learning rate (often called "alpha"). If the state is deterministic and actions have deterministic consequences, this should be 1. If there is any non-determinism, there are three common approaches for picking the learning rate: (1) use a fairly small value (perhaps 0.1); (2) decay it over time (by calling this method before every iteration); (3) remember how many times n each state has already been visited, and set the learning rate to 1/(n+1) before each iteration. The third technique is the best, but it is awkward with continuous state spaces. |
| virtual void | setQValue (const double *pState, const double *pAction, double qValue)=0 | This is the complement to getQValue. |
| | GPolicyLearner (const GRelation &relation, int actionDims) | actionDims specifies how many dimensions are in the action vector. (For example, if your agent has a discrete set of ten possible actions, then actionDims should be 1, because it only takes one value to represent one of ten discrete actions. If your agent has four legs that can move independently to continuous locations relative to the position of the agent in 3D space, then actionDims should be 12, because it takes three values to represent the force or offset vector for each leg.) The number of sense dimensions is the number of attributes in relation minus actionDims. relation specifies the type of each element in the sense and action vectors (whether they are continuous or discrete). The first attributes refer to the senses, and the last actionDims attributes refer to the actions. |
| | GPolicyLearner (GDomNode *pNode) | |
| virtual | ~GPolicyLearner () | |
| void | onTeleport () | If an external force changes the state of pSenses, you should call this method to inform the agent that the change is not a consequence of its most recent action. The agent should refrain from "learning" when refinePolicyAndChooseNextAction is next called. |
| void | setExplore (bool b) | If b is false, the agent will only exploit (and no learning will occur). If b is true (which is the default), the agent will seek some balance between exploration and exploitation. |
| virtual void | chooseAction (const double *pSenses, double *pOutActions)=0 | This method picks the action during training. It is called by refinePolicyAndChooseNextAction. (If it makes things easier, the agent may actually perform the action here, but it is better practice to wait until refinePolicyAndChooseNextAction returns, because that keeps the "thinking" and "acting" stages separate.) One way to pick the next action is to call getQValue for every possible action in the current state and pick the one with the highest Q-value. But if you always pick the best action, you will never discover anything you don't already know, so you need some balance between exploration and exploitation. One way to achieve this is to usually pick the best action but sometimes pick a random one. |
| virtual double | rewardFromLastAction ()=0 | A reward is obtained when the agent performs a particular action in a particular state. (A penalty is a negative reward. A reward of zero is no reward.) This method returns the reward that was obtained when the last action was performed. If you return UNKNOWN_REAL_VALUE, the Q-table will not be updated for that action. |
| GDomNode * | baseDomNode (GDom *pDoc) | If a child class has a serialize method, it should use this method to serialize the base-class members. |
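The getQValue and setQValue entries above leave the storage structure up to the implementer. As a rough illustration only, the sketch below shows one way such storage might look when every sense and action element is discrete. `TableQStore` and all of its members are hypothetical names that are not part of GClasses, and the class deliberately does not derive from GQLearner so that it compiles on its own.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical stand-in for the storage a GQLearner subclass might keep.
// Assumption: every state and action element is a discrete, integer-valued index.
class TableQStore
{
public:
    TableQStore(std::size_t senseDims, std::size_t actionDims, double defaultQ = 0.0)
    : m_senseDims(senseDims), m_actionDims(actionDims), m_defaultQ(defaultQ)
    {
        assert(actionDims > 0);
    }

    // Mirrors getQValue: returns the stored value, or the default for unseen pairs.
    double getQValue(const double* pState, const double* pAction) const
    {
        std::map<Key, double>::const_iterator it = m_table.find(makeKey(pState, pAction));
        return it == m_table.end() ? m_defaultQ : it->second;
    }

    // Mirrors setQValue: overwrites the value stored for this state/action pair.
    void setQValue(const double* pState, const double* pAction, double qValue)
    {
        m_table[makeKey(pState, pAction)] = qValue;
    }

    // Number of state/action pairs that have been written so far.
    std::size_t size() const { return m_table.size(); }

private:
    typedef std::vector<long> Key;

    // Concatenates the state and action vectors into one lookup key.
    Key makeKey(const double* pState, const double* pAction) const
    {
        Key key;
        key.reserve(m_senseDims + m_actionDims);
        for(std::size_t i = 0; i < m_senseDims; i++)
            key.push_back(static_cast<long>(pState[i]));
        for(std::size_t i = 0; i < m_actionDims; i++)
            key.push_back(static_cast<long>(pAction[i]));
        return key;
    }

    std::size_t m_senseDims;
    std::size_t m_actionDims;
    double m_defaultQ;
    std::map<Key, double> m_table;
};
```

A real subclass would forward its getQValue and setQValue overrides to a structure like this. Continuous senses or actions would need a function approximator instead of an exact-match table.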

virtual void GClasses::GQLearner::chooseAction (const double *pSenses, double *pOutActions)

protected, pure virtual

This method picks the action during training. It is called by refinePolicyAndChooseNextAction. (If it makes things easier, the agent may actually perform the action here, but it is better practice to wait until refinePolicyAndChooseNextAction returns, because that keeps the "thinking" and "acting" stages separate.) One way to pick the next action is to call getQValue for every possible action in the current state and pick the one with the highest Q-value. But if you always pick the best action, you will never discover anything you don't already know, so you need some balance between exploration and exploitation. One way to achieve this is to usually pick the best action but sometimes pick a random one.
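The "usually best, sometimes random" strategy described above is commonly called epsilon-greedy. The following is a minimal, standalone sketch of it, assuming a single discrete action dimension. `chooseEpsilonGreedy`, `qOfAction`, `actionCount`, and `epsilon` are hypothetical names introduced for illustration and are not part of GClasses; in a real subclass, `qOfAction` would wrap a call to getQValue for the current state.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <random>

// Hypothetical helper, not part of GClasses.
// With probability epsilon, returns a uniformly random action index (exploration).
// Otherwise returns the index with the highest Q-value (exploitation).
inline std::size_t chooseEpsilonGreedy(const std::function<double(std::size_t)>& qOfAction,
    std::size_t actionCount, double epsilon, std::mt19937& rng)
{
    assert(actionCount > 0);
    std::uniform_real_distribution<double> coin(0.0, 1.0); // draws from [0, 1)
    if(coin(rng) < epsilon)
    {
        std::uniform_int_distribution<std::size_t> pick(0, actionCount - 1);
        return pick(rng);
    }

    // Exploit: scan every action and keep the first one with the highest Q-value.
    std::size_t best = 0;
    double bestQ = qOfAction(0);
    for(std::size_t a = 1; a < actionCount; a++)
    {
        double q = qOfAction(a);
        if(q > bestQ)
        {
            bestQ = q;
            best = a;
        }
    }
    return best;
}
```

An epsilon of 0 always exploits and an epsilon of 1 always explores. A chooseAction override could write the returned index into pOutActions[0].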

Implemented in GClasses::GIncrementalLearnerQAgent.

void GClasses::GQLearner::setLearningRate (double d)

Sets the learning rate (often called "alpha"). If the state is deterministic and actions have deterministic consequences, this should be 1. If there is any non-determinism, there are three common approaches for picking the learning rate: (1) use a fairly small value (perhaps 0.1); (2) decay it over time (by calling this method before every iteration); (3) remember how many times n each state has already been visited, and set the learning rate to 1/(n+1) before each iteration. The third technique is the best, but it is awkward with continuous state spaces.
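To make the visit-count approach concrete, here is a small standalone sketch. `VisitCountSchedule` and `updatedQ` are hypothetical names, not part of GClasses, and the state is assumed to be reducible to a single integer id. `updatedQ` applies the standard tabular Q-learning update; that GQLearner uses exactly this formula internally is an assumption, not something this page confirms.

```cpp
#include <cassert>
#include <cstddef>
#include <map>

// Hypothetical helper, not part of GClasses: tracks how often each discrete
// state has been visited and yields the 1/(n+1) learning rate.
class VisitCountSchedule
{
public:
    // Returns 1/(n+1), where n is the number of earlier visits to stateId,
    // and then records this visit.
    double nextLearningRate(long stateId)
    {
        std::size_t n = m_visits[stateId]++; // a missing entry starts at 0
        return 1.0 / static_cast<double>(n + 1);
    }

private:
    std::map<long, std::size_t> m_visits;
};

// Standard tabular Q-learning update (assumed, see the paragraph above):
// Q(s,a) <- (1 - alpha) * Q(s,a) + alpha * (reward + gamma * max_a' Q(s',a'))
inline double updatedQ(double oldQ, double reward, double maxNextQ, double alpha, double gamma)
{
    assert(alpha >= 0.0 && alpha <= 1.0);
    return (1.0 - alpha) * oldQ + alpha * (reward + gamma * maxNextQ);
}
```

Before each iteration, the value returned by `nextLearningRate` would be passed to setLearningRate. With alpha equal to 1, the deterministic case, the old Q-value is discarded entirely.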

Public Member Functions inherited from GClasses::GPolicyLearner

Protected Member Functions inherited from GClasses::GPolicyLearner

Protected Attributes inherited from GClasses::GPolicyLearner