|

| class | ArrayHolder |

| | Just like Holder, except for arrays. More...

|

| |

| struct | ArrayWrapper |

| |

| struct | ComplexNumber |

| |

| class | Ex |

| | The class of all exceptions thrown by this library. A simple exception object that wraps a string message. More...

|

| |

| class | FileHolder |

| | Closes a file when this object goes out of scope. More...

|

| |

| class | G2DRegionGraph |

| | Implements a region adjacency graph for 2D images, and lets you merge similar regions to create a hierarchical breakdown of the image. More...

|

| |

| class | G3dLetterMaker |

| |

| class | G3DMatrix |

| | Represents a 3x3 matrix. More...

|

| |

| class | G3DVector |

| | Represents a 3D vector. More...

|

| |

| class | GActionPath |

| |

| class | GActionPathSearch |

| | This is the base class of search algorithms that can only perform a discrete set of actions (as opposed to jumping to anywhere in the search space), and seeks to minimize the error of a path of actions. More...

|

| |

| class | GActionPathState |

| |

| class | GAdamOptimizer |

| | Trains a neural network by ADAM. See Diederik P. Kingma and Jimmy Lei Ba, "Adam: A Method for Stochastic Optimization", 2015. More...

|

| |

| class | GAgentActionIterator |

| | Iterates through all the actions that are valid in the current state. If actions are continuous or very numerous, this should sample valid actions in a random order. The caller may decide that it has sampled enough at any time. More...

|

| |

| class | GAgglomerativeClusterer |

| | This merges each cluster with its closest neighbor. The distance between clusters is computed as the distance between the closest members of the clusters times (n^b), where n is the total number of points from both clusters, and b is a balancing factor. More...

|
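The distance rule described for GAgglomerativeClusterer can be sketched as follows. This is only an illustration under stated assumptions: `Point`, `euclidean`, and `clusterDistance` are hypothetical names, not part of the library's API, and Euclidean distance is assumed as the point-to-point metric.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical point type for this sketch (not a library type).
using Point = std::vector<double>;

// Euclidean distance between two points of equal dimensionality.
double euclidean(const Point& a, const Point& b) {
    assert(a.size() == b.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double d = a[i] - b[i];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// Distance between two clusters: the smallest pairwise distance between
// their members, multiplied by n^b, where n is the combined number of
// points and b is the balancing factor.
double clusterDistance(const std::vector<Point>& c1,
                       const std::vector<Point>& c2,
                       double b) {
    assert(!c1.empty() && !c2.empty());
    double closest = std::numeric_limits<double>::infinity();
    for (const Point& p : c1)
        for (const Point& q : c2)
            closest = std::fmin(closest, euclidean(p, q));
    const double n = static_cast<double>(c1.size() + c2.size());
    return closest * std::pow(n, b);
}
```

With b = 0 this reduces to plain single-linkage distance. Larger values of b penalize merges that would produce large clusters, which tends to keep cluster sizes balanced.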

| |

| class | GAgglomerativeTransducer |

| | This is a semi-supervised agglomerative clusterer. It can only handle one output, and it must be nominal. All inputs must be continuous. Also, it assumes that all output values are represented in the training set. More...

|

| |

| class | GAnnealing |

| | Perturbs the current vector in a random direction. If it made the vector worse, restores the previous vector. Decays the deviation of perturbation over time. More...

|

| |

| class | GApp |

| | Contains some generally useful functions for launching applications. More...

|

| |

| class | GArffAttribute |

| |

| class | GArffRelation |

| | ARFF = Attribute-Relation File Format. This stores richer information than GRelation. This includes a name, a name for each attribute, and names for each supported nominal value. More...

|

| |

| class | GArgReader |

| | Parses command-line args and provides methods to conveniently process them. More...

|

| |

| class | GAssignment |

| | An abstract base class defining an assignment between two sets. More...

|

| |

| class | GAtomicCycleFinder |

| | This finds all of the atomic cycles (cycles that cannot be divided into two smaller cycles) in a graph. More...

|

| |

| class | GAttributeSelector |

| | Generates subsets of data that contain only the most relevant features for predicting the labels. The train method of this class produces a ranked ordering of the feature attributes by training a single-layer neural network, and deselecting the weakest attribute until all attributes have been deselected. The transform method uses only the highest-ranked attributes. More...

|

| |

| class | GAutoFilter |

| |

| class | GBag |

| | BAG stands for bootstrap aggregator. It represents an ensemble of voting models. Each model is trained with a slightly different training set, which is produced by drawing randomly from the original training set with replacement until we have a new training set of the same size. Each model is given equal weight in the vote. More...

|
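The resampling step described for GBag (drawing with replacement until the new training set has the same size as the original) might look like the sketch below. The function name `bootstrapIndices` is hypothetical, and the sketch returns row indices rather than copying rows; how the library stores its resampled data is not stated here.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Draws n row indices uniformly at random, with replacement, from [0, n).
// Each ensemble member would be trained on the rows these indices select.
std::vector<std::size_t> bootstrapIndices(std::size_t n, std::mt19937& rng) {
    assert(n > 0);  // the original training set is assumed to be non-empty
    std::vector<std::size_t> indices;
    indices.reserve(n);
    std::uniform_int_distribution<std::size_t> pick(0, n - 1);
    for (std::size_t i = 0; i < n; ++i)
        indices.push_back(pick(rng));
    return indices;
}
```

Because sampling is with replacement, some rows appear several times and others not at all, which is what gives each model a slightly different view of the data.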

| |

| class | GBagOfRecommenders |

| | This class performs bootstrap aggregation with collaborative filtering algorithms. More...

|

| |

| class | GBallTree |

| | An efficient algorithm for finding neighbors. Empirically, this class seems to be a little bit slower than GKdTree. More...

|

| |

| class | GBaselineLearner |

| | Always outputs the label mean (for continuous labels) and the most common class (for nominal labels). More...

|

| |

| class | GBaselineRecommender |

| | This class always predicts the average rating for each item, no matter to whom it is making the recommendation. The purpose of this algorithm is to serve as a baseline for comparison. More...

|

| |

| class | GBayesianModelAveraging |

| | This is an ensemble that uses the bagging approach for training, and Bayesian Model Averaging to combine the models. That is, it trains each model with data drawn randomly with replacement from the original training data. It combines the models with weights proportional to their likelihood as computed using Bayes' law. More...

|

| |

| class | GBayesianModelCombination |

| |

| class | GBayesNet |

| | This class provides a platform for Bayesian belief networks. It allocates nodes in its own heap using placement new, so you don't have to worry about deleting the nodes. You can allocate your nodes manually and use them separately from this class if you want, but it is a lot easier if you use this class to manage it all. More...

|

| |

| class | GBetaDistribution |

| | The Beta distribution. More...

|

| |

| class | GBezier |

| | Represents a Bezier curve. More...

|

| |

| class | GBigInt |

| | Represents an integer of arbitrary size, and provides basic arithmetic functionality. Also contains functionality for implementing RSA public-key cryptography. More...

|

| |

| class | GBillboard |

| | This is a billboard (a 2-D image in a 3-D world) for use with GBillboardWorld. You can set m_repeatX and/or m_repeatY to make the image repeat across the billboard. More...

|

| |

| class | GBillboardWorld |

| | This class represents a world of billboards, and provides a rendering engine. More...

|

| |

| struct | GBitReverser_imp |

| | Template used for reversing numBits of type T. You shouldn't need this; use the function reverseBits instead. More...

|

| |

| struct | GBitReverser_imp< T, 1 > |

| | Base case of template used for reversing numBits of type T. You shouldn't need this; use the function reverseBits instead. More...

|

| |

| class | GBits |

| | Contains various functions for bit analysis. More...

|

| |

| class | GBitTable |

| | Represents a table of bits. More...

|

| |

| class | GBlobIncoming |

| | This class is for deserializing blobs. It takes care of Endianness issues and protects against buffer overruns. This class would be particularly useful for writing a network protocol. More...

|

| |

| class | GBlobOutgoing |

| | This class is for serializing objects. It is the complement to GBlobIncoming. More...

|

| |

| class | GBlobQueue |

| | This is a special queue for handling blobs that come in and go out in varying sizes. It is particularly designed for streaming things that must travel or be parsed in packets that may differ in size from how they are sent or transmitted. More...

|

| |

| class | GBlock |

| | Represents a block of network units (artificial neurons) in a neural network. More...

|

| |

| class | GBlockActivation |

| | The base class of blocks that apply an activation function, such as tanh, in an element-wise manner. More...

|

| |

| class | GBlockAllPairings |

| | Consumes n values and produces a big vector in the form of two vectors (concatenated together) whose corresponding values form all pairs of inputs, plus each input is also paired with each of "lo" and "hi". Example: If the input is {1,2,3}, then the output will be {1,1,1,1,2,2,2,3,3, 2,3,lo,hi,3,lo,hi,lo,hi}. Thus, if there are n inputs, there will be n(n-1)+4n outputs. More...

|
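The output layout in the GBlockAllPairings example can be reproduced with a short sketch. The function `allPairings` is a hypothetical stand-in, not the block's actual interface, and `lo` and `hi` are passed as plain values for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Builds two vectors whose corresponding elements form every pair of
// distinct inputs (i < j), plus each input paired with lo and with hi,
// then returns them concatenated.
std::vector<double> allPairings(const std::vector<double>& in,
                                double lo, double hi) {
    std::vector<double> first, second;
    for (std::size_t i = 0; i < in.size(); ++i) {
        for (std::size_t j = i + 1; j < in.size(); ++j) {
            first.push_back(in[i]);
            second.push_back(in[j]);
        }
        first.push_back(in[i]);
        second.push_back(lo);
        first.push_back(in[i]);
        second.push_back(hi);
    }
    // n(n-1)/2 + 2n pairs, two values per pair: n(n-1) + 4n outputs.
    const std::size_t n = in.size();
    assert(first.size() + second.size() == n * (n - 1) + 4 * n);
    first.insert(first.end(), second.begin(), second.end());
    return first;
}
```

For the input {1,2,3} this yields 9 pairs and therefore 18 output values, matching the count n(n-1)+4n given in the description.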

| |

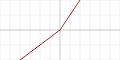

| class | GBlockBentIdentity |

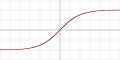

| | Applies the Bent identity element-wise to the input.

| Equation | Plot |

![\[ f(x) = \frac{\sqrt{x^2+1}-1}{2}+x \]](form_4.png)

|

|

More...

|

| |

| class | GBlockConvolutional1D |

| |

| class | GBlockConvolutional2D |

| |

| class | GBlockFeatureSelector |

| | A linear block with no bias. All weights are constrained to fall between 0 and 1, and to sum to 1. Regularization is implicitly applied during training to drive the weights such that each output will settle on giving a weight of 1 to exactly one input unit. More...

|

| |

| class | GBlockFuzzy |

| | A block of fuzzy logic units. Treats the inputs as two concatenated vectors, whose corresponding values each form a pair to be combined with fuzzy logic to produce one output value. Example: The input {1,2,3,4,5,6} will apply fuzzy logic to the three pairs {1,4}, {2,5} and {3,6} to produce a vector of 3 output values. More...

|

| |

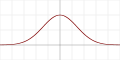

| class | GBlockGaussian |

| | Applies the Gaussian function element-wise to the input.

| Equation | Plot |

![\[ f(x) = e^{-x^2} \]](form_5.png)

|

|

More...

|

| |

| class | GBlockGRU |

| | A block of Gated Recurrent Units. More...

|

| |

| class | GBlockIdentity |

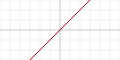

| | Applies the Identity function element-wise to the input. Serves as a pass-through block of units in a neural network.

| Equation | Plot |

![\[ f(x) = x \]](form_0.png)

|

|

More...

|

| |

| class | GBlockLeakyRectifier |

| | Applies the Leaky rectified linear unit (Leaky ReLU) element-wise to the input.

| Equation | Plot |

![\[ f(x) = \left \{ \begin{array}{rcl} 0.01x & \mbox{for} & x < 0\\ x & \mbox{for} & x \ge 0\end{array} \right. \]](form_8.png)

|

|

More...

|

| |

| class | GBlockLinear |

| | Standard fully-connected block of weights. Often followed by a GBlockActivation. More...

|

| |

| class | GBlockLogistic |

| | Applies the Logistic function element-wise to the input.

| Equation | Plot |

![\[ f(x) = \frac{1}{1 + e^{-x}} \]](form_3.png)

|

|

More...

|

| |

| class | GBlockLSTM |

| | A classic Long Short Term Memory block (with coupled forget and input gates). More...

|

| |

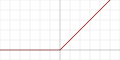

| class | GBlockRectifier |

| | Applies the Rectified linear unit (ReLU) element-wise to the input.

| Equation | Plot |

![\[ f(x) = \left \{ \begin{array}{rcl} 0 & \mbox{for} & x < 0 \\ x & \mbox{for} & x \ge 0\end{array} \right. \]](form_7.png)

|

|

More...

|

| |

| class | GBlockRecurrent |

| | Base class of recurrent blocks. More...

|

| |

| class | GBlockRestrictedBoltzmannMachine |

| |

| class | GBlockScalarProduct |

| | Treats the input as two concatenated vectors. Multiplies each corresponding pair of values together to produce the output. More...

|

| |

| class | GBlockScalarSum |

| | Treats the input as two concatenated vectors. Adds each corresponding pair of values together to produce the output. More...

|

| |

| class | GBlockScaledTanh |

| | Applies a scaled TanH function element-wise to the input.

| Equation |

![\[ f(x) = tanh(x \times 0.66666667) \times 1.7159\]](form_2.png)

|

LeCun et al. suggest scale_in=2/3 and scale_out=1.7159. By carefully matching scale_in and scale_out, the nonlinearity can also be tuned to preserve the mean and variance of its input: More...

|
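As a rough illustration of the scaled TanH equation, the sketch below applies f(x) = tanh(x * scale_in) * scale_out element-wise, using the LeCun et al. constants quoted above as defaults. The function name and signature are assumptions made for this example, not the block's real interface.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Element-wise f(x) = tanh(x * scaleIn) * scaleOut.
// Defaults follow the values quoted in the description:
// scale_in = 2/3 and scale_out = 1.7159.
std::vector<double> scaledTanh(const std::vector<double>& in,
                               double scaleIn = 2.0 / 3.0,
                               double scaleOut = 1.7159) {
    assert(scaleIn > 0.0 && scaleOut > 0.0);  // assumed by this sketch
    std::vector<double> out(in.size());
    for (std::size_t i = 0; i < in.size(); ++i)
        out[i] = std::tanh(in[i] * scaleIn) * scaleOut;
    return out;
}
```

With these constants, f(1) is approximately 1 and f(-1) is approximately -1, so inputs of roughly unit magnitude stay in the near-linear part of the curve.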

| |

| class | GBlockSigExp |

| | An element-wise nonlinearity block. This activation function forms a sigmoid shape by splicing exponential and logarithmic functions together. More...

|

| |

| class | GBlockSine |

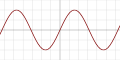

| | Applies the Sinusoid element-wise to the input.

| Equation | Plot |

![\[ f(x) = \sin(x) \]](form_6.png)

|

|

More...

|

| |

| class | GBlockSoftExp |

| | A parameterized activation function (a.k.a. adaptive transfer function). More...

|

| |

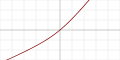

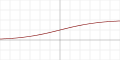

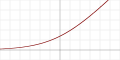

| class | GBlockSoftPlus |

| | Applies the SoftPlus function element-wise to the input.

| Equation | Plot |

![\[ f(x)=\ln(1+e^x) \]](form_9.png)

|

|

More...

|

| |

| class | GBlockSoftRoot |

| | An element-wise nonlinearity block. This function is shaped like a sigmoid, but with both a co-domain and domain that span the continuous values. At very negative values, it is shaped like y=-sqrt(-2x). Near zero, it is shaped like y=x. At very positive values, it is shaped like y=sqrt(2x). More...

|

| |

| class | GBlockSparse |

| | A layer with random sparse connections. More...

|

| |

| class | GBlockSwitch |

| | Treats the input as three concatenated vectors: a, b, and c. (The values in 'a' typically fall in the range [0,1].) The output is computed element-wise as a*b + (1-a)*c. More...

|
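The GBlockSwitch computation can be sketched in a few lines. This assumes the three vectors a, b, and c are stored back to back in that order, as the description suggests; `switchBlock` is a hypothetical name used only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Treats the input as three concatenated vectors a, b, c of equal length
// and returns a*b + (1-a)*c, computed element-wise.
std::vector<double> switchBlock(const std::vector<double>& in) {
    assert(in.size() % 3 == 0);
    const std::size_t k = in.size() / 3;
    std::vector<double> out(k);
    for (std::size_t i = 0; i < k; ++i) {
        const double a = in[i];
        const double b = in[k + i];
        const double c = in[2 * k + i];
        out[i] = a * b + (1.0 - a) * c;
    }
    return out;
}
```

When a is 1 the output equals b, when a is 0 it equals c, and values in between blend the two linearly, which is why 'a' typically falls in [0,1].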

| |

| class | GBlockTanh |

| | Applies the TanH function element-wise to the input.

| Equation | Plot |

![\[ f(x) = tanh(x) \]](form_1.png)

|

|

More...

|

| |

| class | GBlockWeightless |

| | The base class of blocks that have no weights. More...

|

| |

| class | GBNBeta |

| | A node in a belief network that represents a beta distribution. More...

|

| |

| class | GBNCategorical |

| | A node in a belief network that represents a categorical distribution. Instances of this class can serve as parent-nodes to any GBNVariable node. The child node must specify distribution parameters for every category that the parent node supports (or for every combination of categories if there are multiple categorical parents). More...

|

| |

| class | GBNConstant |

| | A node in a belief network that represents a constant value. More...

|

| |

| class | GBNExponential |

| | A node in a belief network that represents an exponential distribution. More...

|

| |

| class | GBNGamma |

| | A node in a belief network that represents a gamma distribution. More...

|

| |

| class | GBNInverseGamma |

| | A node in a belief network that represents an inverse-gamma distribution. More...

|

| |

| class | GBNLogNormal |

| | A node in a belief network that represents a lognormal distribution. More...

|

| |

| class | GBNMath |

| | A node in a belief network that applies some math operation to the output of its one parent node. More...

|

| |

| class | GBNMetropolisNode |

| | This is the base class for nodes in a belief network that are sampled using the Metropolis algorithm. More...

|

| |

| class | GBNNode |

| | The base class of all nodes in a Bayesian belief network. More...

|

| |

| class | GBNNormal |

| | A node in a belief network that represents a Gaussian, or normal, distribution. More...

|

| |

| class | GBNPareto |

| | A node in a belief network that represents a Pareto distribution. More...

|

| |

| class | GBNPoisson |

| | A node in a belief network that represents a Poisson distribution. More...

|

| |

| class | GBNProduct |

| | A node in a belief network that always returns the product of its parent nodes. More...

|

| |

| class | GBNSum |

| | A node in a belief network that always returns the sum of its parent nodes. More...

|

| |

| class | GBNUniformContinuous |

| | A node in a belief network that represents a uniform continuous distribution. More...

|

| |

| class | GBNUniformDiscrete |

| | A node in a belief network that represents a uniform discrete distribution. More...

|

| |

| class | GBNVariable |

| | The base class of nodes in a belief network that represent variable values. More...

|

| |

| class | GBomb |

| | An experimental ensemble technique. More...

|

| |

| class | GBouncyBalls |

| |

| class | GBrandesBetweennessCentrality |

| | Computes the number of times that the shortest-path between every pair of points passes over each edge and vertex. More...

|

| |

| class | GBreadthFirstUnfolding |

| | A manifold learning algorithm that reduces dimensionality in local neighborhoods, and then stitches the reduced local neighborhoods together using the Kabsch algorithm. More...

|

| |

| class | GBruteForceNeighborFinder |

| | Finds neighbors by measuring the distance to all points. This one should work properly even if the distance metric does not support the triangle inequality. More...

|

| |

| class | GBucket |

| | When Train is called, this performs cross-validation on the training set to determine which learner is the best. It then trains that learner with the entire training set. More...

|

| |

| class | GCalibrator |

| |

| class | GCamera |

| | This camera assumes the canvas is specified in Cartesian coordinates. The 3D space is based on a right-handed coordinate system. (So if x goes to the right and y goes up, then z comes out of the screen toward you.) More...

|

| |

| class | GCategoricalDistribution |

| | This is a distribution that specifies a probability for each value in a set of nominal values. More...

|

| |

| class | GCategoricalSampler |

| | This class is for efficiently drawing random values from a categorical distribution with a large number of categories. More...

|

| |

| class | GCategoricalSamplerBatch |

| |

| class | GCharSet |

| | This class represents a set of characters. More...

|

| |

| class | GClusterer |

| | The base class for clustering algorithms. Classes that inherit from this class must implement a method named "cluster" which performs clustering, and a method named "whichCluster" which reports which cluster the specified row is determined to be a member of. More...

|

| |

| class | GCollaborativeFilter |

| | The base class for collaborative filtering recommender systems. More...

|

| |

| class | GCompressor |

| | This implements a simple compression/decompression algorithm. More...

|

| |

| class | GConstStringHashTable |

| | Hash table based on keys of constant strings (or at least strings that won't change during the lifetime of the hash table). It's a good idea to use a GHeap in connection with this class. More...

|

| |

| class | GConstStringToIndexHashTable |

| | Hash table based on keys of constant strings (or at least strings that won't change during the lifetime of the hash table). It's a good idea to use a GHeap in connection with this class. More...

|

| |

| class | GConstVecWrapper |

| | This class temporarily wraps a GVec around a const array of doubles. You should take care to ensure this object is destroyed before the array it wraps. More...

|

| |

| class | GContentBasedFilter |

| |

| class | GContentBoostedCF |

| |

| class | GContext |

| | The base class for the buffers that a thread needs to use (train or predict with) a neural network component. More...

|

| |

| class | GContextGRU |

| | Context class for GRU blocks. More...

|

| |

| class | GContextLayer |

| | Contains the buffers that a thread needs to train or use a GLayer. Each thread should use a separate GContextLayer object. Call GLayer::newContext to obtain a new GContextLayer object. More...

|

| |

| class | GContextLSTM |

| | Context class for LSTM blocks. More...

|

| |

| class | GContextNeuralNet |

| | Contains the buffers that a thread needs to train or use a GNeuralNet. Each thread should use a separate GContextNeuralNet object. Call GNeuralNet::newContext to obtain a new GContextNeuralNet object. More...

|

| |

| class | GContextRecurrent |

| | A special context object for recurrent blocks. More...

|

| |

| class | GContextRecurrentInstance |

| | A single instance of a recurrent context that has been unfolded through time. More...

|

| |

| class | GCoordVectorIterator |

| | An iterator for an n-dimensional coordinate vector. For example, suppose you have a 4-dimensional 2x3x2x1 grid, and you want to iterate through its coordinates: (0000, 0010, 0100, 0110, 0200, 0210, 1000, 1010, 1100, 1110, 1200, 1210). This class will iterate over coordinate vectors in this manner. (For 0-dimensional coordinate vectors, it behaves as though the origin is the only valid coordinate.) More...

|
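The iteration order in the GCoordVectorIterator example behaves like an odometer in which the last dimension advances first. The sketch below reproduces that order; `nextCoord` and `enumerateCoords` are hypothetical helpers and do not reflect the class's actual methods.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Advances cur to the next coordinate, last dimension first.
// Returns false once every coordinate has been visited.
bool nextCoord(std::vector<std::size_t>& cur,
               const std::vector<std::size_t>& ranges) {
    for (std::size_t d = ranges.size(); d-- > 0;) {
        if (++cur[d] < ranges[d])
            return true;
        cur[d] = 0;  // wrap this dimension and carry into the previous one
    }
    return false;
}

// Collects all coordinates of a grid with the given per-dimension ranges.
// For zero dimensions the result holds only the (empty) origin.
std::vector<std::vector<std::size_t>> enumerateCoords(
        const std::vector<std::size_t>& ranges) {
    for (std::size_t r : ranges)
        assert(r > 0);
    std::vector<std::vector<std::size_t>> coords;
    std::vector<std::size_t> cur(ranges.size(), 0);
    do {
        coords.push_back(cur);
    } while (nextCoord(cur, ranges));
    return coords;
}
```

For the 2x3x2x1 grid this visits 12 coordinates, starting at 0000, 0010, 0100 and ending at 1210, in the same order as the list in the description.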

| |

| class | GCosineSimilarity |

| | This is a similarity metric that computes the cosine of the angle between two sparse vectors. More...

|

| |

| class | GCrypto |

| | This is a symmetric-key block cipher. It utilizes a 2048-byte internal state which is initialized using the passphrase. It uses repeated applications of SHA-512 to advance the internal state, and to generate a 1024-byte pad that it XORs with your data to encrypt or decrypt it. Warning: You use this algorithm at your own risk. Many encryption algorithms eventually turn out to be insecure, and to my knowledge, this algorithm has not yet been extensively scrutinized. More...

|

| |

| class | GCSVParser |

| | A class for parsing CSV files (or tab-separated files, or whitespace separated files, etc.). (This class does not support Mac line endings, so you should replace all '\r' with '\n' before using this class if your data comes from a Mac.) More...

|

| |

| class | GCycleCut |

| | This finds the shortcuts in a table of neighbors and replaces them with INVALID_INDEX. More...

|

| |

| class | GDataAugmenter |

| | This class augments data. That is, it transforms the data according to some provided transformation, and attaches the transformed data as new attributes to the existing data. More...

|

| |

| class | GDataColSplitter |

| | This class divides a matrix into two parts. The left-most columns are the features. The right-most columns are the labels. More...

|

| |

| class | GDataPreprocessor |

| | This class facilitates automatic data preprocessing. The constructor requires a dataset and some parameters that specify which portion of the data to use, and how the data should be transformed. A transform will automatically be generated to preprocess the data to meet the specified requirements, and the data will be transformed. Additional datasets can be added with the "add" method. These will be processed by the same transform. The processed data can be retrieved with the "get" method, with an index corresponding to the order of the data. More...

|

| |

| class | GDataRowSplitter |

| | This class divides a features and labels matrix into two parts by randomly assigning each row to one of the two parts, keeping the corresponding rows together. The rows are shallow-copied. The destructor of this class releases all of the row references. More...

|

| |

| class | GDecisionTree |

| | This is an efficient learning algorithm. It divides on the attributes that reduce entropy the most, or alternatively can make random divisions. More...

|

| |

| class | GDenseClusterRecommender |

| | This class clusters the rows according to a dense distance metric, then uses the baseline vector in each cluster to make predictions. More...

|

| |

| class | GDenseCosineDistance |

| | Returns 1 minus the cosine of the angle between the two vectors with the origin. More...

|
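A minimal sketch of the GDenseCosineDistance formula, 1 minus the cosine of the angle between two dense vectors, is shown below. The function name is hypothetical, and the sketch simply asserts that neither vector is the zero vector, since the angle is undefined in that case; how the library handles that case is not stated here.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Returns 1 - cos(theta), where theta is the angle at the origin
// between vectors a and b.
double cosineDistance(const std::vector<double>& a,
                      const std::vector<double>& b) {
    assert(a.size() == b.size());
    double dot = 0.0, normA = 0.0, normB = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        dot += a[i] * b[i];
        normA += a[i] * a[i];
        normB += b[i] * b[i];
    }
    assert(normA > 0.0 && normB > 0.0);  // undefined for zero vectors
    return 1.0 - dot / (std::sqrt(normA) * std::sqrt(normB));
}
```

The result ranges from 0 for vectors pointing the same way, through 1 for orthogonal vectors, to 2 for vectors pointing in opposite directions.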

| |

| class | GDiff |

| | This class finds the differences between two text files. It is case and whitespace sensitive, but is tolerant of Unix/Windows/Mac line endings. It uses lines as the atomic unit. It accepts matching lines in a greedy manner. More...

|

| |

| struct | GDiffLine |

| | This is a helper struct used by GDiff. More...

|

| |

| class | GDijkstra |

| | Finds the shortest path from an origin vertex to all other vertices. Implemented with a binary-heap priority-queue. If the graph is sparse on edges, it will run in about O(n log(n)) time. If the graph is dense, it runs in about O(n^2 log(n)) time. More...

|

| |

| class | GDirList |

| | This class contains a list of files and a list of folders. The constructor populates these lists with the names of files and folders in the current working directory. More...

|

| |

| class | GDiscreteActionIterator |

| | This is a simple and common action iterator that can be used when there is a discrete set of possible actions. More...

|

| |

| class | GDiscretize |

| | This transform uses buckets to convert continuous data into discrete data. It is common to use GFilter to combine this with your favorite modeler (which only supports discrete values) to create a modeler that also supports continuous values. More...

|

| |

| class | GDistanceMetric |

| | This class enables you to define a distance (or dissimilarity) metric between two vectors. pScaleFactors is an optional parameter (it can be NULL) that lets the calling class scale the significance of each dimension. Distance metrics that do not mix with this concept may simply ignore any scale factors. Typically, classes that use this should be able to assume that the triangle inequality will hold, but do not necessarily enforce the parallelogram law. More...

|

| |

| class | GDistribution |

| |

| class | GDom |

| | A Document Object Model. This represents a document as a hierarchy of objects. The DOM can be loaded-from or saved-to a file in JSON (JavaScript Object Notation) format. (See http://json.org.) In the future, support for XML and/or other formats may be added. More...

|

| |

| class | GDomClient |

| | This is a socket client that sends and receives DOM nodes. More...

|

| |

| class | GDomListIterator |

| | This class iterates over the items in a list node. More...

|

| |

| class | GDomNode |

| | Represents a single node in a DOM. More...

|

| |

| class | GDomServer |

| | This is a socket server that sends and receives DOM nodes. More...

|

| |

| class | GDoubleRect |

| | Represents a rectangular region with doubles. More...

|

| |

| class | GDynamicPageConnection |

| |

| class | GDynamicPageServer |

| |

| class | GDynamicPageSession |

| |

| class | GDynamicPageSessionExtension |

| |

| class | GEmpiricalGradientDescent |

| | This algorithm does a gradient descent by feeling a small distance out in each dimension to measure the gradient. For efficiency reasons, it only measures the gradient in one dimension (which it cycles round-robin style) per iteration and uses the remembered gradient in the other dimensions. More...

|
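The round-robin scheme described for GEmpiricalGradientDescent can be sketched as follows. This is an illustration under assumptions, not the library's implementation: the function name, the forward-difference estimate, the fixed learning rate, and the probe distance are all choices made for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Minimizes f by gradient descent with an empirically measured gradient.
// Each iteration probes only one dimension (cycling round-robin) and
// reuses the remembered gradient for all other dimensions.
std::vector<double> empiricalGradientDescent(
        const std::function<double(const std::vector<double>&)>& f,
        std::vector<double> x,
        std::size_t iterations,
        double learningRate = 0.1,
        double feelDistance = 1e-6) {
    assert(!x.empty());
    std::vector<double> grad(x.size(), 0.0);  // remembered gradient
    std::size_t d = 0;                        // dimension probed this iteration
    for (std::size_t it = 0; it < iterations; ++it) {
        // Feel a small distance out in dimension d to estimate the slope.
        const double base = f(x);
        x[d] += feelDistance;
        const double probed = f(x);
        x[d] -= feelDistance;
        grad[d] = (probed - base) / feelDistance;

        // Step in every dimension using fresh and remembered slopes.
        for (std::size_t i = 0; i < x.size(); ++i)
            x[i] -= learningRate * grad[i];

        d = (d + 1) % x.size();
    }
    return x;
}
```

Probing one dimension per iteration costs two evaluations of f instead of one per dimension, at the price of stepping along slopes that may be slightly stale.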

| |

| class | GEnsemble |

| | This is a base-class for ensembles that combine the predictions from multiple weighted models. More...

|

| |

| class | GEuclidSimilarity |

| | This computes the reciprocal of Euclidean distance, where all missing values are simply ignored. More...

|

| |

| class | GEvolutionaryOptimizer |

| | Uses an evolutionary process to optimize a vector. More...

|

| |

| class | GExpectException |

| | Instantiating an object of this class specifies that any exceptions thrown during the life of this object should be treated as "expected". More...

|

| |

| class | GExtendedKalmanFilter |

| | This is an implementation of the Extended Kalman Filter. This class is used by alternately calling advance and correct. More...

|

| |

| class | GFeatureFilter |

| |

| class | GFile |

| | Contains some useful routines for manipulating files. More...

|

| |

| class | GFilter |

| |

| class | GFloatRect |

| | Represents a rectangular region with floats. More...

|

| |

| class | GFloydWarshall |

| | Computes the shortest-cost path between all pairs of vertices in a graph. Takes O(n^3) time. More...

|

| |

| class | GFolderDeserializer |

| | This class complements GFolderSerializer. More...

|

| |

| class | GFolderSerializer |

| | This turns a file or a folder (and its contents recursively) into a stream of bytes. More...

|

| |

| class | GFourier |

| | Fourier transform. More...

|

| |

| class | GFourierWaveProcessor |

| | This is an abstract class that processes a wave file in blocks. Specifically, it divides the wave file up into overlapping blocks, converts them into Fourier space, calls the abstract "process" method with each block, converts back from Fourier space, and then interpolates to create the wave output. More...

|

| |

| class | GFunction |

| | This class represents a math function. (It might be used, for example, in a plotting tool.) More...

|

| |

| class | GFunctionParser |

| | This class parses math equations. (This is useful, for example, for plotting tools.) More...

|

| |

| class | GFuzzyKMeans |

| | A K-means clustering algorithm where every point has partial membership in each cluster. This algorithm is specified in Li, D. and Deogun, J. and Spaulding, W. and Shuart, B., Towards missing data imputation: A study of fuzzy K-means clustering method, In Rough Sets and Current Trends in Computing, Springer, pages 573–579, 2004. More...

|

| |

| class | GGammaDistribution |

| | The Gamma distribution. More...

|

| |

| class | GGaussianProcess |

| | A Gaussian Process model. This class was implemented according to the specification in Algorithm 2.1 on page 19 of chapter 2 of http://www.gaussianprocesses.org/gpml/chapters/ by Carl Edward Rasmussen and Christopher K. I. Williams. More...

|

| |

| class | GGraphCut |

| | This implements an optimized max-flow/min-cut algorithm described in "An experimental comparison of min-cut/max-flow algorithms for energy minimization in vision" by Boykov, Y. and Kolmogorov, V. This implementation assumes that edges are undirected. More...

|

| |

| class | GGraphCutTransducer |

| | A transduction algorithm that uses a max-flow/min-cut graph-cut algorithm to partition the data until each class is in a separate cluster. Unlabeled points are then assigned the label of the cluster in which they fall. More...

|

| |

| class | GGraphEdgeIterator |

| | Iterates over the edges that connect to the specified node. More...

|

| |

| class | GGridSearch |

| | This performs a brute-force grid search with uniform sampling over the unit hypercube with increasing granularity. (Your target function should scale the candidate vectors as necessary to cover the desired space.) This grid-search increases the granularity after each pass, and carefully avoids sampling anywhere that it has sampled before. More...

|

| |

| class | GHashTable |

| | Implements a typical hash table. (It doesn't take ownership of the objects you add, so you must still delete them yourself.) More...

|

| |

| class | GHashTableBase |

| | The base class of hash tables. More...

|

| |

| class | GHashTableEnumerator |

| | This class iterates over the values in a hash table. More...

|

| |

| class | GHeap |

| | Provides a heap in which to put strings or whatever you need to store. If you need to allocate space for a lot of small objects, it's much more efficient to use this class than the C++ heap. Plus, you can delete them all by simply deleting the heap. You can't, however, reuse the space for individual objects in this heap. More...

|

| |

| class | GHiddenMarkovModel |

| |

| class | GHillClimber |

| | In each dimension, tries 5 candidate adjustments: a lot smaller, a little smaller, same spot, a little bigger, and a lot bigger. If it picks a smaller adjustment, the step size in that dimension is made smaller. If it picks a bigger adjustment, the step size in that dimension is made bigger. More...

|

| |

| class | GHistogram |

| | Gathers values and puts them in bins. More...

|

| |

| class | GHtml |

| | This class is for parsing HTML files. It's designed to be very simple. This class might be useful, for example, for building a web-crawler or for extracting readable text from a web page. More...

|

| |

| class | GHttpClient |

| | This class allows you to get files using the HTTP protocol. More...

|

| |

| class | GHttpConnection |

| | Each GHttpConnection represents an HTTP connection between a client and the server. A GHttpServer is a collection of GHttpConnection objects. To implement an HTTP server, you will typically override the doGet and doPost methods of this class to generate web pages. More...

|

| |

| class | GHttpMultipartParser |

| |

| class | GHttpParamParser |

| | A class for parsing the name/value pairs that follow the "?" in a URL. More...

|

| |

| class | GHttpServer |

| | This class allows you to implement a simple HTTP daemon. More...

|

| |

| class | GIdentityFunction |

| | This is an implementation of the identity function. It might be useful, for example, as the observation function in a GRecurrentModel if you want to create a Jordan network. More...

|

| |

| class | GImage |

| | Represents an image. More...

|

| |

| class | GImputeMissingVals |

| |

| class | GIncrementalLearner |

| | This is the base class of supervised learning algorithms that can learn one row at a time. More...

|

| |

| class | GIncrementalLearnerQAgent |

| | This is an implementation of GQLearner that uses an incremental learner for its Q-table and a SoftMax strategy (usually pick the best action, but sometimes pick an action at random) to balance exploration against exploitation. To use this class, you need to supply an incremental learner (see the comment for the constructor for more details) and to implement the GetRewardForLastAction method. More...

|

| |

| class | GIncrementalTransform |

| | This is the base class of algorithms that can transform data one row at a time without supervision. More...

|

| |

| class | GIncrementalTransformChainer |

| | This wraps two two-way-incremental-transforms to form a single combination transform. More...

|

| |

| class | GIndexedMultiSet |

| | A multiset class that can be queried by index. It is implemented using a balanced tree structure, so most operations take O(log(n)) time. More...

|

| |

| class | GIndexVec |

| | Useful functions for operating on vectors of indexes. More...

|

| |

| class | GInstanceRecommender |

| | This class makes recommendations by finding the nearest-neighbors (as determined by evaluating only overlapping ratings), and assuming that the ratings of these neighbors will be predictive of your ratings. More...

|

| |

| class | GInstanceTable |

| | This represents a grid of values. It might be useful as a Q-table with Q-learning. More...

|

| |

| class | GInverseGammaDistribution |

| | The inverse Gamma distribution. More...

|

| |

| class | GIsomap |

| | Isomap is a manifold learning algorithm that uses the Floyd-Warshall algorithm to compute an estimate of the geodesic distance between every pair of points using local neighborhoods, and then uses classic multidimensional scaling to compute a low-dimensional projection. More...

|

| |

| class | GKdTree |

| | An efficient algorithm for finding neighbors. More...

|

| |

| class | GKernel |

| | The base class for kernel functions. Classes which implement this must provide an "apply" method that applies the kernel to two vectors. Kernels may be combined together to form a more complex kernel, to which the kernel trick will still apply. More...

|

| |

| class | GKernelAdd |

| | An addition kernel. More...

|

| |

| class | GKernelChiSquared |

| | Chi Squared kernel. More...

|

| |

| class | GKernelDistance |

| | Returns 1 minus the cosine of the angle between the two vectors with the origin. More...

|

| |

| class | GKernelExp |

| | The Exponential kernel. More...

|

| |

| class | GKernelGaussianRBF |

| | A Gaussian RBF kernel. More...

|

| |

| class | GKernelIdentity |

| | The identity kernel. More...

|

| |

| class | GKernelMultiply |

| | A multiplication kernel. More...

|

| |

| class | GKernelNormalize |

| | A Normalizing kernel. More...

|

| |

| class | GKernelPolynomial |

| | A polynomial kernel. More...

|

| |

| class | GKernelPow |

| | A power kernel. More...

|

| |

| class | GKernelScale |

| | A scalar kernel. More...

|

| |

| class | GKernelTranslate |

| | A translation kernel. More...

|

| |

| class | GKeyPair |

| |

| class | GKMeans |

| | An implementation of the K-means clustering algorithm. More...

|

| |

| class | GKMeansSparse |

| | An implementation of the K-means clustering algorithm. More...

|

| |

| class | GKMedoids |

| | An implementation of the K-medoids clustering algorithm. More...

|

| |

| class | GKMedoidsSparse |

| | An implementation of the K-medoids clustering algorithm for sparse data. More...

|

| |

| class | GKNN |

| | The k-Nearest Neighbor learning algorithm. More...

|

| |

| class | GLabelFilter |

| |

| class | GLayer |

| | GNeuralNet contains GLayers stacked upon each other. GLayer contains GBlocks concatenated beside each other. (GNeuralNet is a type of GBlock.) Each GBlock is an array of differentiable network units (artificial neurons). The user must add at least one GBlock to each GLayer. More...

|

| |

| class | GLearnerLib |

| | Provides some useful functions for instantiating learning algorithms from the command line. More...

|

| |

| class | GLearnerLoader |

| | This class is for loading various learning algorithms from a DOM. When any learning algorithm is saved, it calls baseDomNode, which creates (among other things) a field named "class" which specifies the class name of the algorithm. This class contains methods that will recognize any of the classes in this library and load them. If it doesn't recognize a class, it will either return NULL or throw an exception, depending on the flags you pass to the constructor. Obviously this loader won't recognize any classes that you make. Therefore, you should overload the corresponding method in this class with a new method that will first recognize and load your classes, and then call these methods to handle other types. More...

|

| |

| class | GLinearDistribution |

| | A linear regression model that predicts a distribution. (This algorithm also differs from GLinearRegressor in that it computes its model in closed form instead of using a numerical approach to find it. In general, GLinearRegressor seems to be a bit more accurate. Perhaps the method used in this algorithm is not very numerically stable.) More...

|

| |

| class | GLinearProgramming |

| |

| class | GLinearRegressor |

| | A linear regression model. Let f be a feature vector of real values, and let l be a label vector of real values, then this model estimates l=Bf+e, where B is a matrix of real values, and e is a vector of real values. (In the Wikipedia article on linear regression, B is called "beta", and e is called "epsilon". The approach used by this model to compute beta and epsilon, however, is much more efficient than the approach currently described in that article.) More...

|

| |

| class | GLLE |

| | Locally Linear Embedding is a manifold learning algorithm that uses sparse matrix techniques to efficiently compute a low-dimensional projection. More...

|

| |

| class | GLNormDistance |

| | Interpolates between Manhattan distance (norm=1), Euclidean distance (norm=2), and Chebyshev distance (norm=infinity). For nominal attributes, Hamming distance is used. More...

|

| |

| class | GLogify |

| | This transform converts continuous values into logarithmic space. More...

|

| |

| class | GManifold |

| | This class stores static methods that are useful for manifold learning. More...

|

| |

| class | GManifoldSculpting |

| | Manifold Sculpting. A non-linear dimensionality reduction algorithm. (See Gashler, Michael S. and Ventura, Dan and Martinez, Tony. Iterative non-linear dimensionality reduction with manifold sculpting. In Advances in Neural Information Processing Systems 20, pages 513–520, MIT Press, Cambridge, MA, 2008.) More...

|

| |

| class | GMasterThread |

| | Manages a pool of GWorkerThread objects. To use this class, first call addWorker one or more times. Then, call doJobs. More...

|

| |

| class | GMath |

| | Provides some useful math functions. More...

|

| |

| class | GMatrix |

| | Represents a matrix or a database table. More...

|

| |

| class | GMatrixFactorization |

| | This factors the sparse matrix of ratings, M, such that M = PQ^T where each row in P gives the principal preferences for the corresponding user, and each row in Q gives the linear combination of those preferences that map to a rating for an item. (Actually, P and Q also contain an extra column added for a bias.) This class is implemented according to the specification on page 631 in Takacs, G., Pilaszy, I., Nemeth, B., and Tikk, D. Scalable collaborative filtering approaches for large recommender systems. The Journal of Machine Learning Research, 10:623–656, 2009. ISSN 1532-4435, except with the addition of learning-rate decay and a different stopping criterion. More...

|

| |

| class | GMaxPooling2D |

| |

| class | GMeanMarginsTree |

| | A GMeanMarginsTree is an oblique decision tree specified in Gashler, Michael S. and Giraud-Carrier, Christophe and Martinez, Tony. Decision Tree Ensemble: Small Heterogeneous Is Better Than Large Homogeneous. In The Seventh International Conference on Machine Learning and Applications, Pages 900 - 905, ICMLA '08. 2008. It divides features as follows: It finds the mean and principal component of the output vectors. It divides all the vectors into two groups, one that has a positive dot-product with the principal component (after subtracting the mean) and one that has a negative dot-product with the principal component (after subtracting the mean). Next it finds the average input vector for each of the two groups. Then it finds the mean and principal component of those two vectors. The dividing criterion for this node is to subtract the mean and then see whether the dot-product with the principal component is positive or negative. More...

|

| |

| class | GMergeDataHolder |

| | This class guarantees that the rows in b are merged vertically back into a when this object goes out of scope. More...

|

| |

| class | GMinBinSearch |

| | This is a hill climber for semi-linear error surfaces that minimizes testing with an approach like binary-search. It only searches approximately within the unit cube (although it may stray a little outside of it). It is the target function's responsibility to map this into an appropriate space. More...

|

| |

| class | GMixedRelation |

| |

| class | GMixtureOfGaussians |

| | This class uses Expectation Maximization to find the mixture of Gaussians that best approximates the data in a specified real attribute of a data set. More...

|

| |

| class | GMomentumGreedySearch |

| | At each iteration this algorithm moves in only one dimension. If the situation doesn't improve it tries the opposite direction. If both directions are worse, it decreases the step size for that dimension, otherwise it increases the step size for that dimension. More...

|

| |

| class | GMultivariateNormalDistribution |

| | A multivariate Normal distribution. It can compute the likelihood of a specified vector, and can also generate random vectors from the distribution. More...

|

| |

| class | GNaiveBayes |

| | A naive Bayes classifier. More...

|

| |

| class | GNaiveInstance |

| | This is an instance-based learner. Instead of finding the k-nearest neighbors of a feature vector, it finds the k-nearest neighbors in each dimension. That is, it finds n*k neighbors, considering each dimension independently. It then combines the label from all of these neighbors to make a prediction. Finding neighbors in this way makes it more robust to high-dimensional datasets. It tends to perform worse than k-nn in low-dimensional space, and better than k-nn in high-dimensional space. (It may be thought of as a cross between a k-nn instance learner and a Naive Bayes learner.) It only supports continuous features and labels (so it is common to wrap it in a Categorize filter which will convert nominal features to a categorical distribution of continuous values). More...

|

| |

| class | GNeighborFinder |

| | Finds the k-nearest neighbors of any vector in a dataset. More...

|

| |

| class | GNeighborFinderGeneralizing |

| | Finds the k-nearest neighbors (in a dataset) of an arbitrary vector (which may or may not be in the dataset). More...

|

| |

| class | GNeighborGraph |

| | This wraps a neighbor finding algorithm. It caches the queries for neighbors for the purpose of improving runtime performance. More...

|

| |

| class | GNeighborTransducer |

| | An instance-based transduction algorithm. More...

|

| |

| class | GNeuralDecomposition |

| |

| class | GNeuralNet |

| | GNeuralNet contains GLayers stacked upon each other. GLayer contains GBlocks concatenated beside each other. (GNeuralNet is a type of GBlock, so you can nest.) Each GBlock is an array of differentiable network units (artificial neurons). The user must add at least one GBlock to each GLayer. More...

|

| |

| class | GNeuralNetLearner |

| | A thin wrapper around a GNeuralNet that implements the GIncrementalLearner interface. More...

|

| |

| class | GNeuralNetOptimizer |

| | Optimizes the parameters of a differentiable function using an objective function. More...

|

| |

| class | GNeuralNetTargetFunction |

| | A class that facilitates training a neural network with an arbitrary optimization algorithm. More...

|

| |

| class | GNodeHashTable |

| | This is a hash table that uses any object which inherits from HashTableNode as the key. More...

|

| |

| class | GNoiseGenerator |

| | Just generates Gaussian noise. More...

|

| |

| class | GNominalToCat |

| | This is sort-of the opposite of discretize. It converts each nominal attribute to a categorical distribution by representing each value using the corresponding row of the identity matrix. For example, if a certain nominal attribute has 4 possible values, then a value of 3 would be encoded as the vector 0 0 1 0. When predictions are converted back to nominal values, the mode of the categorical distribution is used as the predicted value. (This is similar to Weka's NominalToBinaryFilter.) More...

|

| |

| class | GNormalDistribution |

| | This is the Normal (a.k.a. Gaussian) distribution. More...

|

| |

| class | GNormalize |

| | This transform scales and shifts continuous values to make them fall within a specified range. More...

|

| |

| class | GNurbs |

| | NURBS = Non-Uniform Rational B-Spline. Periodic = closed loop. More...

|

| |

| class | GObjective |

| | A loss function used to train a differentiable function. More...

|

| |

| class | GOptimizer |

| | This is the base class of all search algorithms that can jump to any vector in the search space and seek the vector that minimizes error. More...

|

| |

| class | GOptimizerBasicTestTargetFunction |

| |

| class | GOverrunSentinel |

| | Placing these on the stack can help catch buffer overruns. More...

|

| |

| class | GPackageClient |

| | This class abstracts a client that speaks a home-made protocol that guarantees packages will arrive in the same order and size as when they were sent. This protocol is a simple layer on top of TCP. More...

|

| |

| class | GPackageConnection |

| | This is a helper class used by GPackageServer. If you implement a custom connection object for a sub-class of GPackageServer, then it should inherit from this class. More...

|

| |

| class | GPackageConnectionBuf |

| | This is a helper class used by GPackageConnection. More...

|

| |

| class | GPackageServer |

| | This class abstracts a server that speaks a home-made protocol that guarantees packages will arrive in the same order and size as when they were sent. This protocol is a simple layer on top of TCP. More...

|

| |

| class | GPairProduct |

| | Generates data by computing the product of each pair of attributes. This is useful for augmenting data. More...

|

| |

| class | GParallelOptimizers |

| | This class simplifies simultaneously solving several optimization problems. More...

|

| |

| class | GParticleSwarm |

| | An optimization algorithm inspired by flocking birds. More...

|

| |

| class | GPassiveConsole |

| | This class provides a non-blocking method for reading characters from stdin. (If there are no characters ready in stdin, it immediately returns '\0'.) The constructor sets flags on the console so that it passes characters to the stream immediately (instead of when Enter is pressed), and so that it doesn't echo the keys (if desired), and it makes stdin non-blocking. The destructor puts all those things back the way they were. More...

|

| |

| class | GPCA |

| | Principal Component Analysis. (Computes the principal components about the mean of the data when you call train. The transformed (reduced-dimensional) data will have a mean about the origin.) More...

|

| |

| class | GPearsonCorrelation |

| | This is a similarity metric that computes the Pearson correlation between two sparse vectors. More...

|

| |

| class | GPipe |

| | This class wraps the handle of a pipe. It closes the pipe when it is destroyed. This class is useful in conjunction with GApp::systemExecute for reading from, or writing to, the standard i/o streams of a child process. More...

|

| |

| class | GPlotLabelSpacer |

| | If you need to place grid lines or labels at regular intervals (like 1000, 2000, 3000, 4000... or 20, 25, 30, 35... or 0, 2, 4, 6, 8, 10...) this class will help you pick where to place the labels so that there are a reasonable number of them, and they all land on nice label values. More...

|

| |

| class | GPlotLabelSpacerLogarithmic |

| | Similar to GPlotLabelSpacer, except for logarithmic grids. To plot in logarithmic space, set your plot window to have a range from log_e(min) to log_e(max). When you actually plot things, plot them at log_e(x), where x is the position of the thing you want to plot. More...

|

| |

| class | GPlotWindow |

| | This class makes it easy to plot points and functions on 2D Cartesian coordinates. More...

|

| |

| class | GPoissonDistribution |

| | The Poisson distribution. More...

|

| |

| class | GPolicyLearner |

| | This is the base class for algorithms that learn a policy. More...

|

| |

| class | GPolynomial |

| | This regresses a multi-dimensional polynomial to fit the data. More...

|

| |

| class | GPrediction |

| | This class is used to represent the predicted distribution made by a supervised learning algorithm. (It is just a shallow wrapper around GDistribution.) It is used in conjunction with calls to GSupervisedLearner::predictDistribution. The predicted distributions will be either categorical distributions (for nominal values) or Normal distributions (for continuous values). More...

|

| |

| class | GPriorityQueue |

| | An implementation of a double-ended heap-based priority queue. (Note that the multimap STL class can also be used to implement a double-ended priority queue, but the STL does not currently provide a heap-based double-ended priority queue, which is asymptotically more efficient for insertions.) More...

|

| |

| class | GPriorityQueueEntry |

| | An internal class used by GPriorityQueue. You should not use this class directly. More...

|

| |

| class | GProbeSearch |

| | This is somewhat of a multi-dimensional version of binary-search. It greedily probes the best choices first, but then starts trying the opposite choices at the higher divisions so that it can also handle non-monotonic target functions. Each iteration performs a binary (divide-and-conquer) search within the unit hypercube. (Your target function should scale the candidate vectors as necessary to cover the desired space.) Because the high-level divisions are typically less correlated with the quality of the final result than the low-level divisions, it searches through the space of possible "probes" by toggling choices in the order from high level to low level. In low-dimensional space, this algorithm tends to quickly find good solutions, especially if the target function is somewhat smooth. In high-dimensional space, the number of iterations to find a good solution seems to grow exponentially. More...

|

| |

| class | GProgressEstimator |

| | This class is used with big loops to estimate the wall-clock time until completion. It works by computing the running median of the duration of recent iterations, and projecting that duration across the remaining iterations. More...

|

| |

| class | GQLearner |

| | The base class of a Q-Learner. To use this class, there are four abstract methods you'll need to implement. See also the comment for GPolicyLearner. More...

|

| |

| class | GRand |

| | This is a 64-bit pseudo-random number generator. More...

|

| |

| class | GRandomDirectionBinarySearch |

| | This algorithm picks a random direction, then uses binary search to determine how far to step, and repeats. More...

|

| |

| class | GRandomForest |

| |

| class | GRandomIndexIterator |

| | This class iterates over all the integer values from 0 to length-1 in random order. More...

|

| |

| class | GRandomSearch |

| | At each iteration, this tries a random vector from the unit hypercube. (Your target function should scale the candidate vectors as necessary to cover the desired space.) More...

|

| |

| class | GRayTraceAreaLight |

| | Represents a light source with area. More...

|

| |

| class | GRayTraceBoundingBoxBase |

| | A class used for making ray-tracing faster. More...

|

| |

| class | GRayTraceBoundingBoxInterior |

| | A class used for making ray-tracing faster. More...

|

| |

| class | GRayTraceBoundingBoxLeaf |

| | A class used for making ray-tracing faster. More...

|

| |

| class | GRayTraceCamera |

| | Represents the camera for a ray tracing scene. More...

|

| |

| class | GRayTraceColor |

| | This class represents a color. It's more precise than GColor, but takes up more memory. Note that the ray tracer ignores the alpha channel because the material specifies a unique transmission color. More...

|

| |

| class | GRayTraceDirectionalLight |

| | Represents directional light in a ray-tracing scene. More...

|

| |

| class | GRayTraceImageTexture |

| |

| class | GRayTraceLight |

| | Represents a source of light in a ray-tracing scene. More...

|

| |

| class | GRayTraceMaterial |

| |

| class | GRayTraceObject |

| | An object in a ray-tracing scene. More...

|

| |

| class | GRayTracePhysicalMaterial |

| | Represents the material of which an object is made in a ray-tracing scene. More...

|

| |

| class | GRayTracePointLight |

| | Represents a point light in a ray-tracing scene. More...

|

| |

| class | GRayTraceScene |

| | Represents a scene that you can ray-trace. More...

|

| |

| class | GRayTraceSphere |

| | A sphere in a ray-tracing scene. More...

|

| |

| class | GRayTraceTriangle |

| | A single triangle in a ray-tracing scene. More...

|

| |

| class | GRayTraceTriMesh |

| | Represents a triangle mesh in a ray-tracing scene. More...

|

| |

| class | GRecommenderLib |

| |

| class | GRect |

| | Represents a rectangular region with integers. More...

|

| |

| class | GRegionAjacencyGraph |

| | The base class for region adjacency graphs. These are useful for breaking down an image into patches of similar color. More...

|

| |

| class | GRegionAreaIterator |

| | Iterates over all the pixels in an image that have the same color and are transitively adjacent. In other words, if you were to flood-fill at the specified point, this returns all the pixels that would be changed. More...

|

| |

| class | GRegionBorderIterator |

| | Iterates the border of a 2D region by running around the border and reporting the coordinates of each interior border pixel and the direction to the edge. It goes in a counter-clockwise direction. More...

|

| |

| class | GRelation |

| | Holds the metadata for a dataset. More...

|

| |

| class | GRelationalElement |

| |

| class | GRelationalRow |

| |

| class | GRelationalTable |

| | See GTree.cpp for an example of how to use this class. More...

|

| |

| class | GReleaseDataHolder |

| | This is a special holder that guarantees the data set will release all of its data before it is deleted. More...

|

| |

| class | GResamplingAdaBoost |

| | This is an implementation of AdaBoost, except instead of using weighted samples, it resamples the training set by giving each sample a probability proportional to its weight. This difference enables it to work with algorithms that do not support weighted samples. More...

|

| |

| class | GReservoir |

| | This transforms data by passing it through a multi-layer perceptron with randomly initialized weights. (This transform automatically converts nominal attributes to categorical as necessary, but it does not normalize. In other words, it assumes that all continuous attributes fall approximately within a 0-1 range.) More...

|

| |

| class | GReservoirNet |

| | This model uses a randomly initialized network to map the inputs into a higher-dimensional space, and it uses a layer of perceptrons to learn in this augmented space. More...

|

| |

| class | GRMSPropOptimizer |

| | Trains a neural network with RMS-prop. More...

|

| |

| class | GRowDistance |

| | This uses a combination of Euclidean distance for continuous attributes, and Hamming distance for nominal attributes. In particular, for each attribute, it calculates pA[i]-pB[i] and squares it, then sums these squares over all attributes and takes the square root of that sum. For nominal attributes pA[i]-pB[i] is 0 if they are the same and 1 if they are different. More...

|

| |

| class | GRunningCovariance |

| | Computes a running covariance matrix about the origin. More...

|

| |

| class | GSampleClimber |

| | This is a variant of empirical gradient descent that tries to estimate the gradient using a minimal number of samples. It is more efficient than empirical gradient descent, but it only works well if the optimization surface is quite locally linear. More...

|

| |

| class | GScalingUnfolder |

| | This is a nonlinear dimensionality reduction algorithm loosely inspired by Maximum Variance Unfolding. It iteratively scales up the data, then restores distances between neighbors. More...

|

| |

| class | GSDL |

| | A collection of routines that are useful when interfacing with SDL. More...

|

| |

| class | GSelfOrganizingMap |

| | An implementation of a Kohonen self-organizing map. More...

|

| |

| class | GSGDOptimizer |

| | Trains a neural network by stochastic gradient descent. More...

|

| |

| class | GShortcutPruner |

| | This uses "betweenness centrality" to find the shortcuts in a table of neighbors and replaces them with INVALID_INDEX. More...

|

| |

| class | GSignalHandler |

| | Temporarily handles certain signals. (When this object is destroyed, it puts all the signal handlers back the way they were.) Periodically call "check" to see if a signal has occurred. More...

|

| |

| class | GSimpleAssignment |

| | A simple concrete implementation of the GAssignment protocol using std::vector<int>. More...

|

| |

| class | GSimplePriorityQueue |

| | Implements a simple priority queue of objects sorted by a double-precision value. More...

|

| |

| class | GSmtp |

| | For sending email to an SMTP server. More...

|

| |

| class | GSoftImpulseDistribution |

| |

| class | GSparseClusterer |

| | This is a base class for clustering algorithms that operate on sparse matrices. More...

|

| |

| class | GSparseClusterRecommender |

| | This class clusters the rows according to a sparse similarity metric, then uses the baseline vector in each cluster to make predictions. More...

|

| |

| class | GSparseInstance |

| | An experimental instance-based learner that prunes attribute-values (or predicates) instead of entire instances. This may be viewed as a form of rule-learning that begins with instance-based learning and then prunes. More...

|

| |

| class | GSparseMatrix |

| | This class stores a row-compressed sparse matrix. That is, each row consists of a map from a column-index to a value. More...

|

| |

| class | GSparseNeighborFinder |

| | Finds neighbors by measuring the distance to all points using a sparse distance metric. More...

|

| |

| class | GSparseSimilarity |

| | The base class for similarity metrics that operate on sparse vectors. More...

|

| |

| class | GSparseVec |

| | Provides static methods for operating on sparse vectors. More...

|

| |

| class | GSpinLock |

| | On Windows, this implements a spin-lock. On Linux, this wraps pthread_mutex. More...

|

| |

| class | GSpinLockHolder |

| | This holder takes a lock (if it is non-NULL) when you construct it. It guarantees to release the lock when it is destroyed. More...

|

| |

| class | GSquaredError |

| | The default loss function is squared error. More...

|

| |

| class | GStemmer |

| | This class just wraps the Porter Stemmer. It finds the stems of words. Examples: "cats"->"cat" "dogs"->"dog" "fries"->"fri" "fishes"->"fish" "pies"->"pi" "lovingly"->"lovingli" "candy"->"candi" "babies"->"babi" "bus"->"bu" "busses"->"buss" "women"->"women" "hasty"->"hasti" "hastily"->"hastili" "fly"->"fly" "kisses"->"kiss" "goes"->"goe" "brought"->"brought" As you can see the stems aren't always real words, but that's okay as long as it produces the same stem for words that have the same etymological roots. Even then it still isn't perfect (notice it got "bus" wrong), but it should still improve analysis somewhat in many cases. More...

|

| |

| class | GStringChopper |

| | This class chops a big string at word breaks so you can display it intelligently on multiple lines. More...

|

| |

| class | GSubImageFinder |

| | This class uses Fourier phase correlation to efficiently find sub-images within a larger image. More...

|

| |

| class | GSubImageFinder2 |

| | This class uses heuristics to find sub-images within a larger image. It is slower, but more stable than GSubImageFinder. More...

|

| |

| class | GSupervisedLearner |

| | This is the base class of algorithms that learn with supervision and have an internal hypothesis model that allows them to generalize rows that were not available at training time. More...

|

| |

| class | GSVG |

| | This class simplifies plotting data to an SVG file. More...

|

| |

| class | GTargetFunction |

| | The optimizer seeks to find values that minimize this target function. More...

|

| |

| class | GTCPClient |

| | This class is an abstraction of a TCP client socket connection. More...

|

| |

| class | GTCPConnection |

| | This class is used by GTCPServer to represent a connection with one of the clients. (If you want to associate some additional objects with each connection, you can inherit from this class, and overload GTCPServer::makeConnection to return your own custom object.) More...

|

| |

| class | GTCPServer |

| | This class is an abstraction of a TCP server, which maintains a set of socket connections. More...

|

| |

| class | GTempBufHelper |

| | A helper class used by the GTEMPBUF macro. More...

|

| |

| class | GThread |

| | A wrapper for PThreads on Linux and for the corresponding Win32 API on Windows. More...

|

| |

| class | GTime |

| | Provides some time-related functions. More...

|

| |

| class | GTokenizer |

| | This is a simple tokenizer that reads a file, one token at a time. To use it, you should make a child class that defines several character sets. Example: More...

|

| |

| class | GTransducer |

| | This is the base class of supervised learning algorithms (that may or may not have an internal model allowing them to generalize rows that were not available at training time). Note that the literature typically refers to supervised learning algorithms that can't generalize (because they lack an internal hypothesis model) as "semi-supervised". (You cannot generalize with a semi-supervised algorithm; you have to train again with the new rows.) More...

|

| |

| class | GTransform |

| | This is the base class of algorithms that transform data without supervision. More...

|

| |

| class | GTreeNode |

| | This is a helper class used by GIndexedMultiSet. More...

|

| |

| class | GTriMeshBuilder |

| |

| class | GUniformDistribution |

| | This is a continuous uniform distribution. More...

|

| |

| class | GUniformRelation |

| | A relation with a minimal memory footprint that assumes all attributes are continuous, or all of them are nominal and have the same number of possible values. More...

|

| |

| class | GUnivariateDistribution |

| | This is the base class for univariate distributions. More...

|

| |

| class | GVec |

| | Represents a mathematical vector of doubles. More...

|

| |

| class | GVecWrapper |

| | This class temporarily wraps a GVec around an array of doubles. You should take care to ensure this object is destroyed before the array it wraps. More...

|

| |

| class | GVocabulary |

| | This is a helper class which is useful for text-mining. It collects words, stems them, filters them through a list of stop-words, and assigns a discrete number to each word. More...

|

| |

| class | GWag |

| | This model trains several multi-layer perceptrons, then averages their weights together in an intelligent manner. More...

|

| |

| class | GWave |

| | Currently only supports PCM wave format. More...

|

| |

| class | GWaveIterator |

| | This class iterates over the samples in a WAVE file. Regardless of the bits-per-sample, this iterator will convert all samples to doubles with a range from -1 to 1. More...

|

| |

| class | GWavelet |

| | Wavelet transform. More...

|

| |

| class | GWebSocketClient |

| |

| class | GWeightedModel |

| | This is a helper-class used by GBag. More...

|

| |

| class | GWidget |

| | The base class of all GUI widgets. More...

|

| |

| class | GWidgetAnimation |

| | An image with multiple frames. More...

|

| |

| class | GWidgetAtomic |

| | The base class of all atomic widgets (widgets that are not composed of other widgets). More...

|

| |

| class | GWidgetBulletGroup |

| | This creates a whole group of bullets arranged either horizontally or vertically at regular intervals. More...

|

| |

| class | GWidgetBulletHole |

| | The easiest way to do bullets is to use the GWidgetBulletGroup class, but if you really want to manage individual bullets yourself, you can use this class to do it. More...

|

| |

| class | GWidgetCanvas |

| | A painting canvas. More...

|

| |

| class | GWidgetCheckBox |

| |

| class | GWidgetCommon |

| |

| class | GWidgetDialog |

| | A form or dialog. More...

|

| |

| class | GWidgetFileSystemBrowser |

| |

| class | GWidgetGrid |

| |

| class | GWidgetGroup |

| | The base class of all widgets that are composed of other widgets. More...

|

| |

| class | GWidgetGroupBox |

| | This just draws a rectangular box. More...

|

| |

| class | GWidgetHorizScrollBar |

| | Makes a horizontal scroll bar. More...

|

| |

| class | GWidgetHorizSlider |

| |

| class | GWidgetImageButton |

| | A button with an image on it. The left half of the image is the unpressed image and the right half is the pressed image. More...

|

| |

| class | GWidgetProgressBar |

| | Automatically determines whether to be horizontal or vertical based on dimensions. Progress ranges from 0 to 1, or from 0 to -1 if you want it to go the other way. More...

|

| |

| class | GWidgetSliderTab |

| | This widget is not meant to be used by itself. It creates one of the parts of a scroll bar or slider bar. More...

|

| |

| class | GWidgetTextBox |

| | This is a box in which the user can enter text. More...

|

| |

| class | GWidgetTextButton |

| | A button with text on it. More...

|

| |

| class | GWidgetTextLabel |

| | A text label. More...

|

| |

| class | GWidgetTextTab |

| | Represents a tab (like for tabbed menus, etc.) More...

|

| |

| class | GWidgetVCRButton |

| | A button with a common icon on it. More...

|

| |

| class | GWidgetVertScrollBar |

| | Makes a vertical scroll bar. More...

|

| |

| class | GWidgetVertSlider |

| |

| class | GWidgetWave |

| |

| class | GWordIterator |

| | This iterates over the words in a block of text. More...

|

| |

| class | GWordStats |

| | Stores statistics about each word in a GVocabulary. More...

|

| |

| class | GWorkerThread |

| | An abstract class for performing jobs. The idea is that you should be able to write the code to perform the jobs, then use it in either a serial or parallel manner. The class you write, that inherits from this one, will typically have additional constructor parameters that pass in any values or data necessary to define the jobs. More...

|

| |

| struct | HashBucket |

| | This is an internal structure used by GHashTable. More...

|

| |

| class | HashTableNode |

| | Objects used with GNodeHashTable should inherit from this class. They must implement two methods (to hash and compare the nodes). More...

|

| |

| class | Holder |

| | This class is very similar to the standard C++ class auto_ptr, except it throws an exception if you try to make a copy of it. This way, it will fail early if you use it in a manner that could result in non-deterministic behavior. (For example, if you create a vector of auto_ptrs, weird things happen if an out-of-memory exception is thrown while resizing the buffer: part of the data will be lost when it reverts back to the original buffer. But if you make a vector of these, it will fail quickly, thus alerting you to the issue.) More...

|

| |

| class | OptimizerTargetFunc |

| |

| struct | PathData |

| | Helper struct to hold the results from GFile::ParsePath. More...

|

| |

| class | ShouldMaximize |

| | Tag class to indicate that the linearAssignment routine should maximize the cost of the assignment. More...

|

| |

| class | ShouldMinimize |

| | Tag class to indicate that the linearAssignment routine should minimize the cost of the assignment. More...

|

| |

| struct | strCmp |

| |

| struct | strComp |

| |

| class | VectorOfPointersHolder |

| | Deletes all of the pointers in a vector when this object goes out of scope. More...

|

| |
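
The Holder and VectorOfPointersHolder entries above describe scope-bound ownership: a Holder fails loudly when copied, and a VectorOfPointersHolder deletes every pointer in a vector when it goes out of scope. The following is only a minimal sketch of that idea, not the library's implementation. The names `HolderSketch`, `VectorOfPointersHolderSketch`, `Tracked`, and `demo` are hypothetical and were invented for this illustration.

```cpp
#include <cassert>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for the idea behind Holder (not the library's code).
template <typename T>
class HolderSketch {
public:
    explicit HolderSketch(T* p = nullptr) : m_p(p) {}

    // Copying is treated as a programming error, so it throws at run time.
    HolderSketch(const HolderSketch&) : m_p(nullptr) {
        throw std::logic_error("HolderSketch must not be copied");
    }
    HolderSketch& operator=(const HolderSketch&) {
        throw std::logic_error("HolderSketch must not be copied");
    }

    // The held object is deleted when the holder goes out of scope.
    ~HolderSketch() { delete m_p; }

    T* get() const { return m_p; }

private:
    T* m_p;
};

// Hypothetical stand-in for the idea behind VectorOfPointersHolder.
template <typename T>
class VectorOfPointersHolderSketch {
public:
    explicit VectorOfPointersHolderSketch(std::vector<T*>& v) : m_v(v) {}
    VectorOfPointersHolderSketch(const VectorOfPointersHolderSketch&) = delete;
    VectorOfPointersHolderSketch& operator=(const VectorOfPointersHolderSketch&) = delete;

    // Deletes every pointer in the referenced vector on scope exit.
    ~VectorOfPointersHolderSketch() {
        for (T* p : m_v) delete p;
    }

private:
    std::vector<T*>& m_v;
};

// Helper type that counts how many instances have been destroyed.
struct Tracked {
    int* counter;
    explicit Tracked(int* c) : counter(c) {}
    ~Tracked() { ++*counter; }
};

// Exercises both sketches and returns the number of destroyed objects.
int demo() {
    int destroyed = 0;
    {
        HolderSketch<Tracked> h(new Tracked(&destroyed));
        bool threw = false;
        try {
            HolderSketch<Tracked> copy(h);  // copy attempt fails early
        } catch (const std::logic_error&) {
            threw = true;
        }
        assert(threw);
        assert(destroyed == 0);  // the original still owns its object
    }  // h leaves scope here and deletes its object
    {
        std::vector<Tracked*> v;
        v.push_back(new Tracked(&destroyed));
        v.push_back(new Tracked(&destroyed));
        VectorOfPointersHolderSketch<Tracked> hold(v);
    }  // hold leaves scope here and deletes both elements
    return destroyed;
}
```

In newer code, `std::unique_ptr` gives the same single-owner guarantee and rejects copies at compile time; the sketch throws at run time only to mirror the behavior the Holder description states.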

|

| int | _stricmp (const char *szA, const char *szB) |

| |

| int | _strnicmp (const char *szA, const char *szB, int len) |

| |

| unsigned int | abgrToArgb (unsigned int rgba) |

| |

| class GClasses::GRand | beta (double alpha, double beta) |

| |

| virtual std::size_t | binomial (std::size_t n, double p) |

| | Returns a random value from a binomial distribution. This method draws n samples from a uniform distribution, so it is very slow for large values of n. binomial_approx is generally much faster. More...

|

| |

| virtual std::size_t | binomial_approx (std::size_t n, double p) |

| | Returns a random value approximately from a binomial distribution. This method uses a normal distribution to approximate the binomial distribution. It is O(1), and is generally quite accurate when n is large and p is not too close to 0 or 1. More...

|

| |

| virtual std::size_t | categorical (std::vector< double > &probabilities) |

| | Returns a random value from a categorical distribution with the specified vector of category probabilities. (Note: If you need to draw many values from a categorical distribution, the GCategoricalSampler and GCategoricalSamplerBatch classes are designed to do this more efficiently.) More...

|

| |

| virtual double | cauchy () |

| | Returns a random value from a standard Cauchy distribution. More...

|

| |

| virtual double | chiSquare (double t) |

| | Returns a random value from a chi-squared distribution. More...

|

| |

| int | ClipChan (int n) |

| |

| double | cost (const GSimpleAssignment &assign, const GMatrix &costs) |

| | Return the cost of the assignment assign for the matrix costs. More...

|

| |

| virtual void | dirichlet (double *pOutVec, const double *pParams, int dims) |