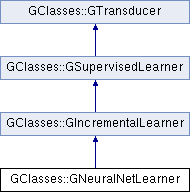

A thin wrapper around a GNeuralNet that implements the GIncrementalLearner interface.
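
To make the "thin wrapper" idea concrete, here is a minimal, self-contained C++ sketch that does not use GClasses. `LinearNet` (one linear layer), `SgdOptimizer` (plain stochastic gradient descent on squared error) and `NetLearner` are hypothetical stand-ins for GNeuralNet, GNeuralNetOptimizer and GNeuralNetLearner. The learning rate of 0.1 is an arbitrary assumption. The wrapper owns the net, forwards prediction to it, and creates its optimizer only on first use, which mirrors the documented behavior of optimizer().

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical stand-in for a neural net: a single linear layer, y = W x + b.
class LinearNet {
public:
    LinearNet(std::size_t nIn, std::size_t nOut)
        : w_(nOut, Vec(nIn, 0.0)), b_(nOut, 0.0) {}

    Vec forward(const Vec& x) const {
        assert(!w_.empty() && x.size() == w_[0].size());
        Vec y(b_);
        for (std::size_t i = 0; i < y.size(); ++i)
            for (std::size_t j = 0; j < x.size(); ++j)
                y[i] += w_[i][j] * x[j];
        return y;
    }

    std::vector<Vec>& weights() { return w_; }
    Vec& bias() { return b_; }

private:
    std::vector<Vec> w_;
    Vec b_;
};

// Hypothetical optimizer: one plain SGD step on squared error per call.
class SgdOptimizer {
public:
    SgdOptimizer(LinearNet& net, double rate) : net_(net), rate_(rate) {}

    void step(const Vec& x, const Vec& target) {
        Vec y = net_.forward(x);
        for (std::size_t i = 0; i < y.size(); ++i) {
            double err = y[i] - target[i]; // d(0.5 * err^2) / d(y[i])
            for (std::size_t j = 0; j < x.size(); ++j)
                net_.weights()[i][j] -= rate_ * err * x[j];
            net_.bias()[i] -= rate_ * err;
        }
    }

private:
    LinearNet& net_;
    double rate_;
};

// The wrapper: owns the net and exposes an incremental-learner-style interface.
class NetLearner {
public:
    NetLearner(std::size_t nIn, std::size_t nOut) : net_(nIn, nOut) {}
    NetLearner(const NetLearner&) = delete;            // the optimizer refers to net_,
    NetLearner& operator=(const NetLearner&) = delete; // so copies would be unsafe

    LinearNet& nn() { return net_; }

    // Creates the optimizer on first use, then keeps returning the same one.
    SgdOptimizer& optimizer() {
        if (!opt_) opt_ = std::make_unique<SgdOptimizer>(net_, 0.1); // assumed rate
        return *opt_;
    }
    bool hasOptimizer() const { return opt_ != nullptr; }

    void trainIncremental(const Vec& in, const Vec& out) { optimizer().step(in, out); }
    Vec predict(const Vec& in) const { return net_.forward(in); }

private:
    LinearNet net_;
    std::unique_ptr<SgdOptimizer> opt_;
};
```

Because the optimizer keeps a reference to the wrapped net, the sketch deletes the copy operations. GTransducer likewise refuses to be copied by value, although it does so by throwing an exception.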

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | GNeuralNetLearner () | |
| | GNeuralNetLearner (const GDomNode *pNode) | |
| virtual | ~GNeuralNetLearner () | |
| virtual bool | canImplicitlyHandleMissingFeatures () override | See the comment for GTransducer::canImplicitlyHandleMissingFeatures. |
| virtual bool | canImplicitlyHandleNominalFeatures () override | See the comment for GTransducer::canImplicitlyHandleNominalFeatures. |
| virtual bool | canImplicitlyHandleNominalLabels () override | See the comment for GTransducer::canImplicitlyHandleNominalLabels. |
| virtual void | clear () override | See the comment for GSupervisedLearner::clear. |
| GNeuralNet & | nn () | Returns a reference to the neural net that this class wraps. |
| GNeuralNetOptimizer & | optimizer () | Lazily creates an optimizer for the neural net that this class wraps, and returns a reference to it. |
| virtual void | predict (const GVec &in, GVec &out) override | See the comment for GSupervisedLearner::predict. |
| virtual void | predictDistribution (const GVec &in, GPrediction *pOut) override | See the comment for GSupervisedLearner::predictDistribution. |
| virtual GDomNode * | serialize (GDom *pDoc) const override | Marshals the model into a GDomNode in pDoc, which can then be saved to a text file. |
| virtual bool | supportedFeatureRange (double *pOutMin, double *pOutMax) override | See the comment for GTransducer::supportedFeatureRange. |
| virtual bool | supportedLabelRange (double *pOutMin, double *pOutMax) override | See the comment for GTransducer::supportedLabelRange. |
| virtual void | trainIncremental (const GVec &in, const GVec &out) override | Pass a single input row and the corresponding label to incrementally train this model. |
| virtual void | trainSparse (GSparseMatrix &features, GMatrix &labels) override | Train using a sparse feature matrix. (A typical implementation of this method first calls beginIncrementalLearning, then iterates over all of the feature rows. For each row it converts the sparse row to a dense row, calls trainIncremental with the dense row, then discards the dense row and proceeds to the next row.) |
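
The trainSparse strategy described above can be sketched as follows. This illustrates the idea under stated assumptions and is not the GClasses implementation. Sparse rows are modeled as a `std::map` from column index to value, and the two `std::function` callbacks stand in for beginIncrementalLearning and trainIncremental. Only one dense row exists at a time, so memory use stays proportional to one row rather than to the whole densified matrix.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <vector>

using DenseRow = std::vector<double>;
using SparseRow = std::map<std::size_t, double>; // column index -> non-zero value (assumed layout)

// Sketch of the trainSparse strategy: begin once, then densify, train on,
// and discard each row in turn.
void trainSparse(const std::vector<SparseRow>& features,
                 const std::vector<DenseRow>& labels,
                 std::size_t nFeatureCols,
                 const std::function<void()>& beginIncrementalLearning,
                 const std::function<void(const DenseRow&, const DenseRow&)>& trainIncremental) {
    assert(features.size() == labels.size());
    beginIncrementalLearning(); // must happen before any trainIncremental call
    for (std::size_t i = 0; i < features.size(); ++i) {
        DenseRow dense(nFeatureCols, 0.0); // absent entries are implicit zeros
        for (const auto& entry : features[i]) {
            assert(entry.first < nFeatureCols);
            dense[entry.first] = entry.second;
        }
        trainIncremental(dense, labels[i]);
        // `dense` is discarded when this iteration ends
    }
}
```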

Public Member Functions inherited from GClasses::GIncrementalLearner

| Type | Member | Description |
|---|---|---|
| | GIncrementalLearner () | General-purpose constructor. |
| | GIncrementalLearner (const GDomNode *pNode) | Deserialization constructor. |
| virtual | ~GIncrementalLearner () | Destructor. |
| void | beginIncrementalLearning (const GRelation &featureRel, const GRelation &labelRel) | You must call this method before you call trainIncremental. |
| void | beginIncrementalLearning (const GMatrix &features, const GMatrix &labels) | A version of beginIncrementalLearning that supports data-dependent filters. |
| virtual bool | canTrainIncrementally () | Returns true. |
| virtual bool | isFilter () | Only the GFilter class should return true from this method. |
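
The second beginIncrementalLearning overload exists for data-dependent filters, which are preprocessing steps that must look at the training data before they can transform a row. Min-max normalization is a typical example. The sketch below is hypothetical: `MinMaxFilter` and its `fit`/`transform` methods are not part of GClasses. It learns per-column minima and maxima from a matrix, then maps later rows into a target range, such as the range a learner might report through supportedFeatureRange.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

using Row = std::vector<double>;
using Matrix = std::vector<Row>;

// Hypothetical data-dependent filter: it must see the training data
// (to learn per-column minima and maxima) before it can transform rows.
class MinMaxFilter {
public:
    // lo/hi stand in for the range a learner reports via supportedFeatureRange.
    MinMaxFilter(double lo, double hi) : lo_(lo), hi_(hi) {}

    void fit(const Matrix& data) {
        if (data.empty()) throw std::invalid_argument("need at least one row");
        min_ = data[0];
        max_ = data[0];
        for (const Row& r : data) {
            assert(r.size() == min_.size());
            for (std::size_t j = 0; j < r.size(); ++j) {
                if (r[j] < min_[j]) min_[j] = r[j];
                if (r[j] > max_[j]) max_[j] = r[j];
            }
        }
    }

    Row transform(const Row& r) const {
        assert(r.size() == min_.size());
        Row out(r.size());
        for (std::size_t j = 0; j < r.size(); ++j) {
            double span = max_[j] - min_[j];
            out[j] = span > 0.0 ? lo_ + (r[j] - min_[j]) * (hi_ - lo_) / span
                                : 0.5 * (lo_ + hi_); // constant column: use the midpoint
        }
        return out;
    }

private:
    double lo_, hi_;
    Row min_, max_;
};
```

In an incremental setting, `fit` would run once when learning begins, and `transform` would be applied to every row before it reaches trainIncremental.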

Public Member Functions inherited from GClasses::GSupervisedLearner

| Type | Member | Description |
|---|---|---|
| | GSupervisedLearner () | General-purpose constructor. |
| | GSupervisedLearner (const GDomNode *pNode) | Deserialization constructor. |
| virtual | ~GSupervisedLearner () | Destructor. |
| void | basicTest (double minAccuracy1, double minAccuracy2, double deviation=1e-6, bool printAccuracy=false, double warnRange=0.035) | This is a helper method used by the unit tests of several model learners. |
| virtual bool | canGeneralize () | Returns true because fully supervised learners have an internal model that allows them to generalize to previously unseen rows. |
| void | confusion (GMatrix &features, GMatrix &labels, std::vector< GMatrix * > &stats) | Generates a confusion matrix containing the total counts of the number of times each value was expected and predicted. (Rows represent target values, and columns represent predicted values.) stats should be an empty vector. This method resizes stats to the number of dimensions in the label vector. The caller is responsible for deleting all of the matrices that this method puts in the vector. For continuous label dimensions, the corresponding entry is NULL. |
| void | precisionRecall (double *pOutPrecision, size_t nPrecisionSize, GMatrix &features, GMatrix &labels, size_t label, size_t nReps) | label specifies which output to measure (it should be 0 if there is only one label dimension). The measurement is performed nReps times and the results are averaged together. nPrecisionSize specifies the number of points at which the function is sampled. pOutPrecision should be an array big enough to hold nPrecisionSize elements for every possible label value (if the attribute is continuous, it only needs to hold nPrecisionSize elements). |
| const GRelation & | relFeatures () | Returns a reference to the feature relation (meta-data about the input attributes). |
| const GRelation & | relLabels () | Returns a reference to the label relation (meta-data about the output attributes). |
| double | sumSquaredError (const GMatrix &features, const GMatrix &labels, double *pOutSAE=NULL) | Computes the sum-squared-error for predicting the labels from the features. For categorical labels, Hamming distance is used. |
| void | train (const GMatrix &features, const GMatrix &labels) | Call this method to train the model. |
| virtual double | trainAndTest (const GMatrix &trainFeatures, const GMatrix &trainLabels, const GMatrix &testFeatures, const GMatrix &testLabels, double *pOutSAE=NULL) | Trains and tests this learner. Returns sum-squared-error. |
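
The error and confusion-matrix definitions above can be sketched with plain standard-library types. In this hypothetical version, a model is any function from a feature row to a prediction row. `valueCounts[j] == 0` marks label column j as continuous, and a positive value gives the number of nominal values; this convention is an assumption of the sketch. A nominal mismatch adds 1 to the error, which is the Hamming distance the documentation mentions. Continuous columns in the confusion result get an empty matrix where the documented method stores NULL.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

using Row = std::vector<double>;
using Matrix = std::vector<Row>;
using CountMatrix = std::vector<std::vector<std::size_t>>;
using Predictor = std::function<Row(const Row&)>;

// Sum-squared error; nominal columns contribute Hamming distance (0 or 1).
double sumSquaredError(const Predictor& predict, const Matrix& features, const Matrix& labels,
                       const std::vector<std::size_t>& valueCounts, double* pOutSAE = nullptr) {
    assert(features.size() == labels.size());
    double sse = 0.0, sae = 0.0;
    for (std::size_t i = 0; i < features.size(); ++i) {
        Row pred = predict(features[i]);
        for (std::size_t j = 0; j < valueCounts.size(); ++j) {
            if (valueCounts[j] == 0) { // continuous column
                double d = labels[i][j] - pred[j];
                sse += d * d;
                sae += std::fabs(d);
            } else if (static_cast<long>(labels[i][j]) != static_cast<long>(pred[j])) {
                sse += 1.0; // one mismatched nominal value
                sae += 1.0;
            }
        }
    }
    if (pOutSAE) *pOutSAE = sae;
    return sse;
}

// One confusion matrix per label column: rows = target value, columns = predicted value.
std::vector<CountMatrix> confusion(const Predictor& predict, const Matrix& features,
                                   const Matrix& labels,
                                   const std::vector<std::size_t>& valueCounts) {
    assert(features.size() == labels.size());
    std::vector<CountMatrix> stats(valueCounts.size()); // continuous columns stay empty
    for (std::size_t j = 0; j < valueCounts.size(); ++j)
        if (valueCounts[j] > 0)
            stats[j].assign(valueCounts[j], std::vector<std::size_t>(valueCounts[j], 0));
    for (std::size_t i = 0; i < features.size(); ++i) {
        Row pred = predict(features[i]);
        for (std::size_t j = 0; j < valueCounts.size(); ++j) {
            if (valueCounts[j] == 0) continue;
            double t = labels[i][j], p = pred[j];
            assert(t >= 0.0 && p >= 0.0);
            auto target = static_cast<std::size_t>(t);
            auto guess = static_cast<std::size_t>(p);
            assert(target < valueCounts[j] && guess < valueCounts[j]);
            ++stats[j][target][guess];
        }
    }
    return stats;
}
```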

Public Member Functions inherited from GClasses::GTransducer

| Type | Member | Description |
|---|---|---|
| | GTransducer () | General-purpose constructor. |
| | GTransducer (const GTransducer &that) | Copy-constructor. Throws an exception to prevent models from being copied by value. |
| virtual | ~GTransducer () | |
| virtual bool | canImplicitlyHandleContinuousFeatures () | Returns true iff this algorithm can implicitly handle continuous features. If it cannot, then the GDiscretize transform will be used to convert continuous features to nominal values before passing them to it. |
| virtual bool | canImplicitlyHandleContinuousLabels () | Returns true iff this algorithm can implicitly handle continuous labels (a.k.a. regression). If it cannot, then the GDiscretize transform will be used during training to convert continuous labels to nominal values, and to convert nominal predictions back to continuous labels. |
| double | crossValidate (const GMatrix &features, const GMatrix &labels, size_t nFolds, double *pOutSAE=NULL, RepValidateCallback pCB=NULL, size_t nRep=0, void *pThis=NULL) | Performs n-fold cross-validation on features and labels, using trainAndTest for each fold, and returns the sum-squared error. pCB is an optional callback for reporting intermediate stats; it can be NULL if you don't want intermediate reporting. nRep is just the rep number that will be passed to the callback. pThis is just a pointer that will be passed to the callback for you to use however you want; it doesn't affect this method. If pOutSAE is not NULL, the sum absolute error will be placed there. |
| GTransducer & | operator= (const GTransducer &other) | Throws an exception to prevent models from being copied by value. |
| GRand & | rand () | Returns a reference to the random number generator associated with this object. For example, you could use it to change the random seed to make this algorithm behave differently, which might be important in an ensemble of learners. |
| double | repValidate (const GMatrix &features, const GMatrix &labels, size_t reps, size_t nFolds, double *pOutSAE=NULL, RepValidateCallback pCB=NULL, void *pThis=NULL) | Performs cross-validation reps times and returns the average score. pCB is an optional callback for reporting intermediate stats; it can be NULL if you don't want intermediate reporting. pThis is just a pointer that will be passed to the callback for you to use however you want; it doesn't affect this method. If pOutSAE is not NULL, the sum absolute error will be placed there. |
| std::unique_ptr< GMatrix > | transduce (const GMatrix &features1, const GMatrix &labels1, const GMatrix &features2) | Predicts a set of labels to correspond with features2, such that these labels will be consistent with the patterns exhibited by features1 and labels1. |
| void | transductiveConfusionMatrix (const GMatrix &trainFeatures, const GMatrix &trainLabels, const GMatrix &testFeatures, const GMatrix &testLabels, std::vector< GMatrix * > &stats) | Makes a confusion matrix for a transduction algorithm. |
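
A minimal sketch of n-fold and repeated cross-validation follows. It is not the GClasses code. Rows are referred to by index, and the hypothetical `TrainAndTest` callback stands in for trainAndTest. Per-fold errors are summed, which is consistent with crossValidate returning a sum-squared error. The repeated variant reshuffles the rows for each rep and averages the totals. Shuffling uses `std::mt19937` in place of the learner's rand() generator.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <numeric>
#include <random>
#include <vector>

using Indices = std::vector<std::size_t>;
// Trains on the rows in `train`, tests on the rows in `test`, returns the test error.
using TrainAndTest = std::function<double(const Indices& train, const Indices& test)>;

// n-fold cross-validation: shuffle row indices, split them into nFolds
// contiguous chunks, hold each chunk out once, and sum the per-fold errors.
double crossValidate(std::size_t nRows, std::size_t nFolds, std::mt19937& rng,
                     const TrainAndTest& trainAndTest) {
    assert(nFolds >= 2 && nFolds <= nRows);
    Indices order(nRows);
    std::iota(order.begin(), order.end(), std::size_t{0});
    std::shuffle(order.begin(), order.end(), rng);
    double sse = 0.0;
    for (std::size_t f = 0; f < nFolds; ++f) {
        auto first = order.begin() + static_cast<std::ptrdiff_t>(f * nRows / nFolds);
        auto last = order.begin() + static_cast<std::ptrdiff_t>((f + 1) * nRows / nFolds);
        Indices test(first, last);
        Indices train(order.begin(), first);
        train.insert(train.end(), last, order.end());
        sse += trainAndTest(train, test);
    }
    return sse;
}

// Repeated cross-validation: a fresh shuffle for each rep, averaged over reps.
double repValidate(std::size_t nRows, std::size_t reps, std::size_t nFolds, unsigned seed,
                   const TrainAndTest& trainAndTest) {
    assert(reps > 0);
    std::mt19937 rng(seed);
    double total = 0.0;
    for (std::size_t r = 0; r < reps; ++r)
        total += crossValidate(nRows, nFolds, rng, trainAndTest);
    return total / static_cast<double>(reps);
}
```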

Protected Member Functions

| Type | Member | Description |
|---|---|---|
| virtual void | beginIncrementalLearningInner (const GRelation &featureRel, const GRelation &labelRel) override | See the comment for GIncrementalLearner::beginIncrementalLearningInner. |
| virtual void | trainInner (const GMatrix &features, const GMatrix &labels) override | See the comment for GIncrementalLearner::trainInner. |
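
The protected *Inner methods suggest a common C++ pattern. Public, non-virtual entry points do shared bookkeeping and argument checking, then delegate to protected virtual hooks that subclasses override. The sketch below illustrates that pattern with hypothetical classes (`IncrementalLearnerBase`, `MeanLearner`). The checks it performs are assumptions and do not describe what GClasses does inside train or beginIncrementalLearning.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

using Row = std::vector<double>;
using Matrix = std::vector<Row>;

// Hypothetical base class: public entry points check arguments and record
// state, then delegate to protected virtual "Inner" hooks.
class IncrementalLearnerBase {
public:
    virtual ~IncrementalLearnerBase() = default;

    void train(const Matrix& features, const Matrix& labels) {
        if (features.empty() || features.size() != labels.size())
            throw std::invalid_argument("features and labels need the same non-zero row count");
        trainInner(features, labels);
    }

    void beginIncrementalLearning(std::size_t nFeatures, std::size_t nLabels) {
        begun_ = true;
        beginIncrementalLearningInner(nFeatures, nLabels);
    }

    virtual void trainIncremental(const Row& in, const Row& out) = 0;
    virtual Row predict(const Row& in) const = 0;

protected:
    virtual void beginIncrementalLearningInner(std::size_t nFeatures, std::size_t nLabels) = 0;
    virtual void trainInner(const Matrix& features, const Matrix& labels) = 0;

    void requireBegun() const {
        if (!begun_) throw std::logic_error("call beginIncrementalLearning first");
    }

private:
    bool begun_ = false;
};

// Toy subclass: predicts the running mean of every label it has seen.
class MeanLearner : public IncrementalLearnerBase {
public:
    void trainIncremental(const Row& in, const Row& out) override {
        requireBegun();
        (void)in; // a mean ignores the features
        assert(out.size() == mean_.size());
        ++count_;
        for (std::size_t j = 0; j < mean_.size(); ++j)
            mean_[j] += (out[j] - mean_[j]) / static_cast<double>(count_);
    }

    Row predict(const Row&) const override { return mean_; }

protected:
    void beginIncrementalLearningInner(std::size_t, std::size_t nLabels) override {
        mean_.assign(nLabels, 0.0);
        count_ = 0;
    }

    // Batch training is built on the incremental path.
    void trainInner(const Matrix& features, const Matrix& labels) override {
        beginIncrementalLearning(features[0].size(), labels[0].size());
        for (std::size_t i = 0; i < features.size(); ++i)
            trainIncremental(features[i], labels[i]);
    }

private:
    Row mean_;
    std::size_t count_ = 0;
};
```

Here batch training is layered on the incremental path, so a subclass only has to get trainIncremental right. Whether a given GClasses learner does the same is not stated in this reference.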

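Protected Member Functions inherited from GClasses::GIncrementalLearner

| Type | Member | Description |
|---|---|---|
| virtual void | beginIncrementalLearningInner (const GMatrix &features, const GMatrix &labels) | |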

Protected Member Functions inherited from GClasses::GSupervisedLearner

| Type | Member | Description |
|---|---|---|
| GDomNode * | baseDomNode (GDom *pDoc, const char *szClassName) const | Child classes should use this in their implementation of serialize. |
| size_t | precisionRecallContinuous (GPrediction *pOutput, double *pFunc, GMatrix &trainFeatures, GMatrix &trainLabels, GMatrix &testFeatures, GMatrix &testLabels, size_t label) | This is a helper method used by precisionRecall. |
| size_t | precisionRecallNominal (GPrediction *pOutput, double *pFunc, GMatrix &trainFeatures, GMatrix &trainLabels, GMatrix &testFeatures, GMatrix &testLabels, size_t label, int value) | This is a helper method used by precisionRecall. |
| void | setupFilters (const GMatrix &features, const GMatrix &labels) | This method determines which data filters (normalize, discretize, and/or nominal-to-cat) are needed and trains them. |
| virtual std::unique_ptr< GMatrix > | transduceInner (const GMatrix &features1, const GMatrix &labels1, const GMatrix &features2) | See GTransducer::transduce. |
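
setupFilters is described only at a high level. The following hypothetical sketch shows one plausible rule for choosing among the three filters it names. The rule is driven by capability flags like those exposed by the canImplicitlyHandle* and supportedFeatureRange members. The `Capabilities` and `DataSummary` structs, the filter names as strings, and the exact conditions are assumptions for illustration and are not the actual GClasses logic.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical summary of what a learner can handle.
struct Capabilities {
    bool nominalFeatures;    // like canImplicitlyHandleNominalFeatures()
    bool continuousFeatures; // like canImplicitlyHandleContinuousFeatures()
    bool hasFeatureRange;    // like supportedFeatureRange() returning true
    double minFeature, maxFeature;
};

// Hypothetical summary of the training features.
struct DataSummary {
    bool anyNominal;
    bool anyContinuous;
    double minValue, maxValue; // over the continuous features
};

// Decide which filters are needed, in the spirit of setupFilters.
std::vector<std::string> chooseFilters(const Capabilities& cap, const DataSummary& data) {
    std::vector<std::string> filters;
    if (data.anyNominal && !cap.nominalFeatures)
        filters.push_back("nominal-to-cat"); // one-hot encode nominal features
    if (data.anyContinuous && !cap.continuousFeatures)
        filters.push_back("discretize");     // bin continuous features
    else if (data.anyContinuous && cap.hasFeatureRange &&
             (data.minValue < cap.minFeature || data.maxValue > cap.maxFeature))
        filters.push_back("normalize");      // rescale into the supported range
    return filters;
}
```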

Public Member Functions inherited from GClasses::GIncrementalLearner

Public Member Functions inherited from GClasses::GSupervisedLearner

Public Member Functions inherited from GClasses::GTransducer

Static Public Member Functions inherited from GClasses::GSupervisedLearner

Protected Member Functions inherited from GClasses::GIncrementalLearner

Protected Member Functions inherited from GClasses::GSupervisedLearner

Protected Attributes inherited from GClasses::GSupervisedLearner

Protected Attributes inherited from GClasses::GTransducer

Static Protected Member Functions inherited from GClasses::GSupervisedLearner